Logistic regression is a simple yet effective way to predict binary outcomes, such as yes/no or true/false, from data features. It combines the features into a weighted sum and passes that sum through a sigmoid function to get the probability that an example belongs to a given class. To get the best results, you need to scale your features properly, evaluate your model on data it has not seen, and fine-tune it.

Key Takeaways

- Logistic regression is used for binary classification tasks like spam detection or disease diagnosis.

- It predicts probabilities using a sigmoid function to determine class membership.

- Feature scaling, such as normalization or standardization, makes model training more stable and efficient.

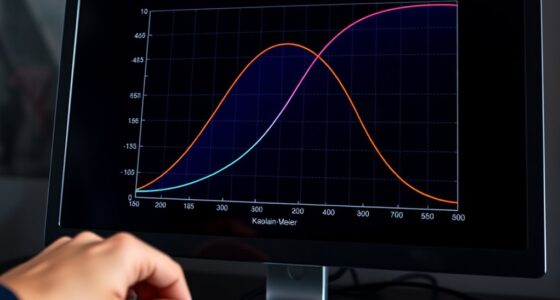

- Model evaluation involves metrics like accuracy, ROC curve, and AUC to assess performance.

- Proper data splitting, regular testing, and feature adjustments improve model robustness and accuracy.

Have you ever wondered how computers can predict whether an email is spam or if a patient has a certain disease? The answer lies in how they analyze data with techniques like logistic regression. This method helps you classify outcomes that are binary—either yes or no, true or false. But to make it work effectively, you need to understand some key steps, including feature scaling and model evaluation.
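To make the classification step concrete, here is a minimal sketch of how a logistic regression model turns features into a probability and then into a yes/no decision. The spam features, weights, and bias are made-up values for illustration, not a trained model.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a value between 0 and 1."""
    # Two branches keep math.exp() from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(features, weights, bias):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict(features, weights, bias, threshold=0.5):
    """Turn the probability into a 0/1 decision."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical spam model: features are [number_of_links, contains_word_free].
weights = [0.8, 1.5]
bias = -2.0

p = predict_proba([3, 1], weights, bias)
print(f"P(spam) = {p:.2f}")                    # roughly 0.87
print("label:", predict([3, 1], weights, bias))
```

The 0.5 threshold is only a default. Lowering it catches more spam at the cost of more false alarms, and raising it does the opposite.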

Feature scaling is vital because it puts all your input features on a comparable scale. Imagine comparing a person’s age (say, 0 to 100) with their income (which could be thousands or millions). If you don’t scale these features, income dominates the weighted sum simply because of its larger numerical values, so gradient-based training takes uneven steps across features and any regularization penalizes the coefficients unevenly. Scaling methods like normalization (rescaling to a fixed range such as 0 to 1) or standardization (zero mean, unit variance) bring all features to a similar range, making training more stable and faster. This matters for logistic regression because the sigmoid function saturates for inputs of large magnitude: very large raw values push predicted probabilities toward 0 or 1, where gradients become tiny. Proper feature scaling helps the model converge more quickly and often improves its overall performance.
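The two scaling methods mentioned above can be sketched in a few lines. This is an illustrative version using only the standard library; the `ages` and `incomes` values are invented for the example.

```python
import statistics


def standardize(column):
    """Rescale a column to zero mean and unit variance."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(x - mean) / std for x in column]


def min_max(column):
    """Rescale a column to the range 0 to 1 (normalization)."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


# Invented example data: very different raw ranges.
ages = [25, 40, 55, 70]
incomes = [30_000, 52_000, 75_000, 1_200_000]

print([round(v, 2) for v in standardize(ages)])
print([round(v, 2) for v in standardize(incomes)])
print([round(v, 2) for v in min_max(incomes)])
```

In a real project, compute the mean, standard deviation, minimum, and maximum on the training data only, then reuse those same numbers to transform the test data. Otherwise information from the test set leaks into training.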

Once you’ve trained your logistic regression model, evaluating its effectiveness is essential. Model evaluation means testing how well your model predicts unseen data. You can use metrics like accuracy, precision, recall, and the F1-score to get a thorough view of its performance; accuracy alone can mislead when one class is much rarer than the other. For example, if you’re working on spam detection, you want to know not just how many emails are correctly classified but also how much of the actual spam the model catches (recall) and how many of the emails it flags really are spam (precision). Additionally, you might look at the ROC curve and the area under the curve (AUC) to assess how well your model separates the two classes across different thresholds. An AUC of 0.5 corresponds to random guessing and 1.0 to perfect separation, so these techniques tell you whether your logistic regression model is reliable.
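As a rough illustration of these metrics, the sketch below computes accuracy, precision, recall, F1, and AUC by hand. The labels and scores are invented, and the AUC function uses the pairwise-ranking definition (the chance that a random positive example scores higher than a random negative one), which is simple but slow on large datasets.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct ranking.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one example of each class")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Invented example: 1 = spam, 0 = legitimate; scores are predicted P(spam).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.2, 0.65, 0.4, 0.55, 0.1, 0.8, 0.3]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics)
print("AUC:", auc)
```

Note that precision, recall, and F1 depend on the 0.5 threshold used to build `y_pred`, while AUC is computed from the raw scores and does not depend on any single threshold.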

In practice, you’ll split your data into training and testing sets, train your model on one, and evaluate it on the other. This helps you detect overfitting, where your model memorizes the training data but fails on new data: a large gap between training and test performance is the warning sign. Regularly examining your model’s performance and fine-tuning it, perhaps by adjusting features or trying different scaling methods, keeps your logistic regression accurate and robust. By properly scaling features and thoroughly evaluating your model, you’re setting yourself up for success in creating predictive systems that can handle real-world data.
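The split, train, and evaluate workflow can be sketched end to end with a small hand-written gradient descent loop. Everything here is an assumption made for illustration: the synthetic two-cluster data, the helper names, the learning rate, and the number of epochs. A library implementation would normally replace the `fit` function.

```python
import math
import random


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_test_split(rows, labels, test_fraction=0.25, seed=0):
    """Shuffle indices, then hold out a fraction of the data for testing."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = round(len(rows) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([rows[i] for i in train_idx], [labels[i] for i in train_idx],
            [rows[i] for i in test_idx], [labels[i] for i in test_idx])


def score(x, w, b):
    return b + sum(wj * xj for wj, xj in zip(w, x))


def fit(X, y, lr=0.1, epochs=300):
    """Batch gradient descent on the average log loss."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(score(xi, w, b)) - yi  # prediction minus label
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(X, y, w, b):
    preds = [1 if sigmoid(score(xi, w, b)) >= 0.5 else 0 for xi in X]
    return sum(p == t for p, t in zip(preds, y)) / len(y)


# Synthetic data: two Gaussian clusters, one per class.
rng = random.Random(42)
rows, labels = [], []
for label, center in ((0, -2.0), (1, 2.0)):
    for _ in range(100):
        rows.append([rng.gauss(center, 1.0), rng.gauss(center, 1.0)])
        labels.append(label)

X_train, y_train, X_test, y_test = train_test_split(rows, labels)
w, b = fit(X_train, y_train)

train_acc = accuracy(X_train, y_train, w, b)
test_acc = accuracy(X_test, y_test, w, b)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

If training accuracy is much higher than test accuracy, the model is likely overfitting. On this easy synthetic data the two numbers should be close.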

Frequently Asked Questions

How Does Logistic Regression Compare to Other Classification Algorithms?

When comparing classification algorithms, you’ll find logistic regression is straightforward, with clear model assumptions: the log-odds of the outcome are a linear function of the features, and the observations are independent of one another. It’s effective for binary problems and easy to interpret. However, because it only models linear effects, you need to pay attention to feature selection and feature engineering to improve accuracy. Other algorithms, like decision trees or neural networks, may handle complex patterns better but can be less transparent. Your choice depends on your data, goals, and the importance of interpretability.

What Are the Common Pitfalls When Applying Logistic Regression?

Imagine you’re using logistic regression to predict customer churn. A common pitfall is model overfitting, where your model captures noise instead of true patterns, reducing its accuracy on new data. Another issue is feature multicollinearity, where correlated features distort the model’s estimates. To avoid these pitfalls, you should regularly validate your model, reduce redundant features, and keep it simple to enhance reliability and interpretability.
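One simple way to spot redundant features before training is to check pairwise correlations. The sketch below flags feature pairs whose Pearson correlation exceeds a threshold. The churn columns and the 0.9 cutoff are assumptions chosen for illustration, and pairwise correlation will not catch every form of multicollinearity.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant column")
    return cov / (sx * sy)


def correlated_pairs(columns, threshold=0.9):
    """Return (name_a, name_b, r) for every pair with |r| >= threshold."""
    names = list(columns)
    flagged = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = pearson(columns[a], columns[b])
            if abs(r) >= threshold:
                flagged.append((a, b, r))
    return flagged


# Invented churn features; annual_charge is just 12 x monthly_charge.
features = {
    "monthly_charge": [20, 35, 50, 65, 80],
    "annual_charge": [240, 420, 600, 780, 960],
    "support_calls": [3, 0, 4, 1, 2],
}

flagged = correlated_pairs(features)
for a, b, r in flagged:
    print(f"{a} and {b} are highly correlated (r = {r:.2f})")
```

When a pair is flagged, dropping one of the two features or combining them usually gives more stable coefficient estimates.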

How Do I Interpret Coefficients in Logistic Regression?

Each coefficient is the change in the log-odds of the outcome for a one-unit increase in its predictor, holding the other predictors constant. The sign tells you the direction of the relationship: a positive coefficient means higher predictor values increase the odds of the outcome, and a negative coefficient means they decrease them. Exponentiating the coefficient gives you the odds ratio, which is usually easier to read. Statistical significance (for example, a p-value or confidence interval) tells you how confident you can be that the effect is not zero, so check it before drawing conclusions from any single coefficient.
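A short worked example may help with the odds ratio interpretation. The coefficient values and feature names below are hypothetical, not taken from a fitted model.

```python
import math


def odds_ratio(coefficient):
    """Multiplicative change in the odds per one-unit increase."""
    return math.exp(coefficient)


def apply_odds_ratio(probability, ratio):
    """Convert probability to odds, multiply by the ratio, convert back."""
    odds = probability / (1.0 - probability)
    new_odds = odds * ratio
    return new_odds / (1.0 + new_odds)


# Hypothetical coefficients from a disease-risk model.
coefficients = {"age_decades": 0.405, "exercise_hours": -0.223}

for name, beta in coefficients.items():
    print(f"{name}: coefficient {beta:+.3f}, odds ratio {odds_ratio(beta):.2f}")

# Effect of one extra decade of age on someone with a 20% baseline risk.
new_p = apply_odds_ratio(0.20, odds_ratio(coefficients["age_decades"]))
print(f"risk goes from 0.20 to {new_p:.2f}")
```

Here an odds ratio of about 1.50 means each extra decade multiplies the odds by roughly 1.5, while an odds ratio of about 0.80 means each extra hour of exercise cuts the odds by roughly 20%. The odds ratio applies to odds, not probabilities, which is why the 20% risk rises to about 27% rather than 30%.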

Can Logistic Regression Handle Multi-Class Classification?

Yes, logistic regression can handle multi-class classification through an extension called multinomial logistic regression. Instead of a sigmoid over a single score, it uses the softmax function to turn one score per class into probabilities that sum to 1. You interpret the coefficients as the log-odds of each class relative to a baseline class. A simpler alternative is one-vs-rest, which trains a separate binary model for each class. Either approach lets you predict which of several categories an observation belongs to, making logistic regression versatile for various real-world problems.
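The softmax step can be sketched as follows. The class labels, weights, and biases are invented for illustration and do not come from a trained model.

```python
import math


def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(features, weight_rows, biases, labels):
    """Score each class with its own weights, then pick the most probable."""
    scores = [b + sum(w * x for w, x in zip(ws, features))
              for ws, b in zip(weight_rows, biases)]
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


# Hypothetical three-class model with two features per example.
labels = ["low", "medium", "high"]
weight_rows = [[-1.0, -0.5], [0.0, 0.0], [1.0, 0.5]]  # "medium" is the baseline
biases = [0.5, 0.0, -0.5]

label, probs = predict_class([2.0, 1.0], weight_rows, biases, labels)
print(label, [round(p, 3) for p in probs])
```

With only two classes, softmax reduces to the familiar sigmoid: `softmax([z, 0])[0]` equals `1 / (1 + exp(-z))`, which is why the binary model is a special case of the multinomial one.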

What Preprocessing Steps Are Essential Before Using Logistic Regression?

Imagine preparing ingredients before cooking; your data needs similar care. Before using logistic regression, you should scale your numerical features so they have comparable ranges. Additionally, data encoding, such as one-hot encoding, transforms categorical variables into a numerical format your model can use. You should also handle missing values, since the model cannot work with empty entries. These steps help your model train efficiently and make your predictions more reliable.
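As a small illustration of the encoding step, the sketch below one-hot encodes a categorical column by hand. The `plans` column and its categories are invented for the example.

```python
def one_hot_encode(values, categories=None):
    """Turn a list of category labels into rows of 0/1 indicator columns.

    If `categories` is omitted, the sorted unique values are used.
    Labels not in `categories` become an all-zero row.
    """
    cats = sorted(set(values)) if categories is None else list(categories)
    index = {c: i for i, c in enumerate(cats)}
    rows = []
    for v in values:
        row = [0] * len(cats)
        if v in index:
            row[index[v]] = 1
        rows.append(row)
    return cats, rows


# Invented categorical feature: a customer's subscription plan.
plans = ["basic", "premium", "basic", "trial"]
categories, encoded = one_hot_encode(plans)

print(categories)
for plan, row in zip(plans, encoded):
    print(plan, row)

# Reuse the training categories when encoding new data, so the columns
# stay in the same order; an unseen label maps to all zeros.
_, new_rows = one_hot_encode(["premium", "enterprise"], categories)
print(new_rows)
```

When the model has an intercept, the full set of indicator columns is redundant, because they always sum to 1. A common choice is to drop one category and treat it as the baseline.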

Conclusion

Now that you understand how logistic regression works, you’ll see it’s a powerful tool for classification tasks. It is widely used in fields like healthcare, where it helps predict patient outcomes. With this simple method, you can build effective, interpretable models quickly. Keep practicing: scale your features, evaluate on held-out data, and refine your model as you go.