The chi-square test of independence helps you see if two categorical variables are related or independent by comparing observed data to what you’d expect if there’s no connection. You organize your data in a contingency table and calculate expected counts based on the totals. Significant differences between observed and expected counts suggest a relationship. To understand how this works and its limitations, keep exploring the details behind this useful statistical tool.

Key Takeaways

- The Chi-Square Test of Independence assesses whether two categorical variables are related or independent.

- It compares observed frequencies in a contingency table to expected frequencies assuming independence.

- A significant result (low p-value) indicates a likely relationship between variables.

- Adequate sample size is essential; small samples can lead to unreliable conclusions.

- The test helps determine if observed associations are statistically significant or due to chance.

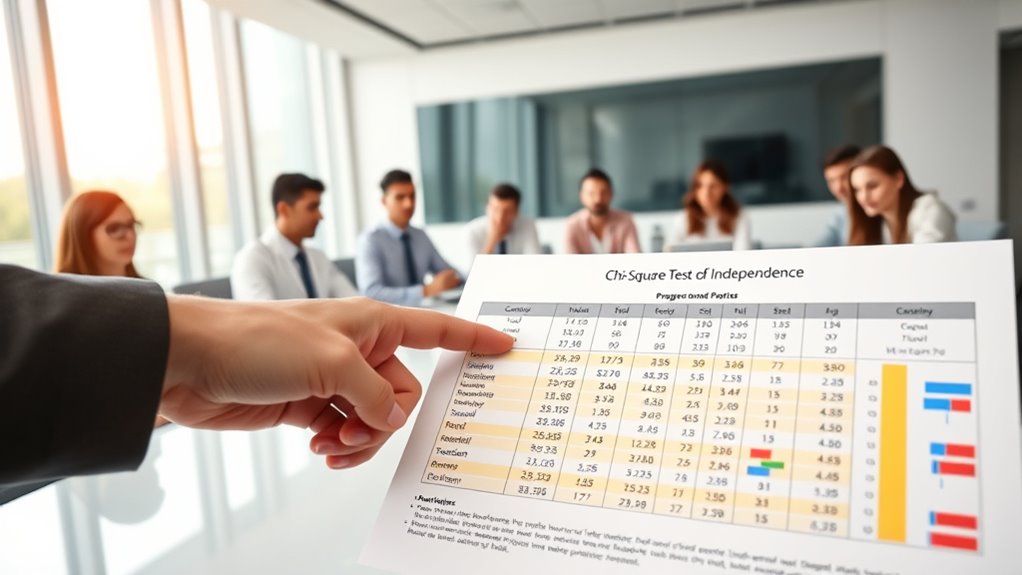

The Chi-Square Test of Independence is a statistical method used to determine whether two categorical variables are related or independent. When you want to check whether there’s a statistically significant association between two such variables, this test provides a straightforward way to do so. To start, you organize your data in a contingency table, which displays the frequency counts of observations for every combination of categories of the two variables. This table is fundamental because it summarizes your data in a way that makes patterns or potential associations easy to see. The sample size, or the total number of observations, matters here. A larger sample generally makes the results more reliable: the chi-square approximation behind the test is more accurate, and real associations are easier to detect. Conversely, small samples can lead to misleading conclusions because the counts may not accurately reflect the true relationship between the variables.

Once you have your contingency table, the test compares the observed count in each cell to the count you would expect if the two variables were truly independent. Each expected count is calculated under the assumption of independence as the cell’s row total multiplied by its column total, divided by the overall sample size. If the observed counts differ substantially from the expected counts, it suggests that the variables are not independent and are related in some way. The key to this comparison is the chi-square statistic, which sums (observed − expected)² / expected across all cells to quantify the overall discrepancy. You then determine whether this discrepancy is statistically significant by comparing the chi-square value to a critical value from the chi-square distribution with (rows − 1) × (columns − 1) degrees of freedom at your chosen significance level, or equivalently by computing a p-value.
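
The calculation described above can be sketched in a few lines of plain Python. The 2×2 table of counts is made up for illustration, and `chi_square_statistic` is a hypothetical helper name, not a function from any library.

```python
def chi_square_statistic(observed):
    """Return (statistic, degrees_of_freedom, expected) for a table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)

    # Expected count under independence: row total * column total / n
    expected = [[r * c / n for c in col_totals] for r in row_totals]

    # Sum of (observed - expected)^2 / expected over every cell
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(observed, expected)
        for o, e in zip(obs_row, exp_row)
    )
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof, expected


# Hypothetical counts: rows are groups, columns are outcomes
table = [[30, 10],
         [20, 40]]
statistic, dof, expected = chi_square_statistic(table)
print(expected)                  # [[20.0, 20.0], [30.0, 30.0]]
print(round(statistic, 2), dof)  # 16.67 1
```

This sketch applies no continuity correction, so statistical packages that apply one by default to 2×2 tables may report a slightly smaller statistic for the same counts.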

Interpreting the results involves understanding what the p-value indicates. The p-value is the probability of seeing a discrepancy at least as large as the one in your table if the variables were truly independent. A low p-value (conventionally below 0.05) means such a discrepancy would be unlikely under independence, leading you to reject the null hypothesis of independence. This gives you evidence of a relationship between the two variables. On the other hand, a high p-value implies that the differences between observed and expected counts could plausibly be due to random variation, so you fail to reject the null hypothesis. This is not proof that the variables are independent. Keep in mind, however, that the accuracy of this test depends on having a sufficiently large sample size. Small samples may violate the assumptions of the chi-square test, leading to unreliable results or the need for alternative tests. In brief, the chi-square test of independence is a powerful tool that, when used correctly with appropriate sample sizes and well-organized contingency tables, helps you assess whether two categorical variables are related or independent.
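
As a rough illustration of the decision rule, the sketch below computes the upper-tail probability of a chi-square distribution using only the standard library and compares it with a significance level. The function name `chi2_p_value`, the statistic of 16.67, and the single degree of freedom are assumptions chosen for the example. In practice you would normally take the p-value from a statistics package rather than compute it by hand.

```python
import math


def chi2_p_value(statistic, dof):
    """Upper-tail probability of a chi-square distribution with integer dof."""
    if statistic <= 0:
        return 1.0
    half = statistic / 2.0
    if dof % 2 == 0:
        # Even dof: exp(-x/2) * sum over i < dof/2 of (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, dof // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd dof: two-sided normal tail plus a finite series
    chi = math.sqrt(statistic)
    tail = math.erfc(chi / math.sqrt(2.0))
    density = math.exp(-half) / math.sqrt(2.0 * math.pi)
    term, total = chi, 0.0
    for r in range(1, (dof - 1) // 2 + 1):
        if r > 1:
            term *= statistic / (2 * r - 1)
        total += term
    return tail + 2.0 * density * total


alpha = 0.05                      # chosen significance level
p_value = chi2_p_value(16.67, 1)  # hypothetical statistic and degrees of freedom
decision = "reject independence" if p_value < alpha else "fail to reject independence"
print(decision)
```

A quick sanity check is that the familiar table critical values, such as 3.841 for 1 degree of freedom, give a p-value very close to 0.05.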

Frequently Asked Questions

Can the Chi-Square Test Be Used for Small Sample Sizes?

The chi-square test becomes unreliable with small samples. When your sample is too small, the expected frequencies in some cells may be too low (a common rule of thumb is below 5) for the chi-square approximation to hold, which makes the p-value untrustworthy. In that situation, it’s better to use an alternative such as Fisher’s Exact Test, which is most commonly applied to 2×2 tables. Always check your sample size and expected cell counts before applying the chi-square test.
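
For a 2×2 table, Fisher’s Exact Test can be sketched directly from hypergeometric probabilities. The code below is a minimal illustration, assuming the common two-sided definition that sums the probabilities of all tables no more likely than the observed one. The helper name `fisher_exact_two_sided` and the counts are made up for the example.

```python
from math import comb


def fisher_exact_two_sided(table):
    """Two-sided Fisher exact p-value for a 2x2 table of counts."""
    (a, b), (c, d) = table
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2
    denom = comb(n, col1)

    def prob(x):
        # Probability of x in the top-left cell with all margins held fixed
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum every table that is no more likely than the observed one
    # (small relative tolerance guards against float ties)
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Hypothetical small sample: every expected count here is 2, well below 5
small_table = [[3, 1],
               [1, 3]]
p = fisher_exact_two_sided(small_table)
print(round(p, 4))  # 0.4857
```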

How Do You Interpret the Results of a Chi-Square Test?

Start with the p-value. If it is below your significance level (commonly 0.05), the result is statistically significant, and you can reject the null hypothesis that the variables are independent. To see where the association comes from, examine the residuals, which measure how far each observed count deviates from its expected count. Large positive or negative residuals (as a rough guide, beyond about ±2 for standardized residuals) show which specific cells contribute most to the significant result, making your interpretation of the chi-square test more meaningful.
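
One common choice of residual is the Pearson residual, (observed − expected) / √expected, computed for each cell. The sketch below uses a hypothetical table and a hypothetical helper name, and it does not compute the adjusted standardized residuals that some packages report.

```python
import math


def pearson_residuals(observed):
    """Return (observed - expected) / sqrt(expected) for each cell."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    residuals = []
    for row, r_total in zip(observed, row_totals):
        res_row = []
        for o, c_total in zip(row, col_totals):
            e = r_total * c_total / n  # expected count under independence
            res_row.append((o - e) / math.sqrt(e))
        residuals.append(res_row)
    return residuals


# Hypothetical counts: rows are groups, columns are outcomes
table = [[30, 10],
         [20, 40]]
for res_row in pearson_residuals(table):
    print([round(r, 2) for r in res_row])
# [2.24, -2.24]
# [-1.83, 1.83]
```

Here the first row has more observations in the first column than independence would predict, and the squared residuals add up to the chi-square statistic for the table.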

What Are Common Mistakes to Avoid in Chi-Square Analysis?

When performing a chi-square analysis, a common mistake is ignoring the expected frequencies: as a rule of thumb, they should be at least 5 in each cell for the results to be valid. Also verify that you calculate the degrees of freedom correctly as (rows − 1) × (columns − 1) for your contingency table, since a miscalculation leads to the wrong critical value or p-value. Check that your sample size is adequate, and always interpret results in context rather than relying solely on statistical significance.
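
These checks are easy to automate before running the test. The sketch below is one possible way to do it: the helper name, the threshold parameter `min_expected`, and the 2×3 table are illustrative assumptions.

```python
def check_chi_square_assumptions(observed, min_expected=5):
    """Return (degrees_of_freedom, cells whose expected count is too low)."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)

    low_cells = []
    for i, r_total in enumerate(row_totals):
        for j, c_total in enumerate(col_totals):
            expected = r_total * c_total / n
            if expected < min_expected:
                low_cells.append((i, j, expected))

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return dof, low_cells


# Hypothetical 2x3 table of counts
table = [[8, 2, 10],
         [4, 3, 13]]
dof, low_cells = check_chi_square_assumptions(table)
print(dof)        # 2
print(low_cells)  # [(0, 1, 2.5), (1, 1, 2.5)]
```

In this example the middle column is too sparse, so you might merge it with a neighbouring category if that makes sense for the data, or use an exact test instead.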

Is the Chi-Square Test Suitable for Continuous Data?

Not directly. The chi-square test works on counts of observations in categories, so raw continuous measurements can’t be fed into it as they are. It is a nonparametric test: unlike t-tests or ANOVA, it does not assume the data follow a normal distribution, but it does require categorical data. If your variables are continuous, you can either convert them into categories (accepting that binning discards information and that the result can depend on where you place the cut points) or choose a test designed for continuous variables, such as a t-test or ANOVA, when its assumptions are met.
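
Converting a continuous variable into categories can be sketched with the standard library as follows. The ages, cut points, and labels are invented for the example, and `bin_values` is a hypothetical helper.

```python
from bisect import bisect_right
from collections import Counter


def bin_values(values, edges, labels):
    """Assign each value the label of the interval it falls into.

    `edges` must be sorted, and `labels` needs one more entry than `edges`.
    A value equal to an edge goes into the higher interval.
    """
    return [labels[bisect_right(edges, v)] for v in values]


ages = [22, 30, 45, 50, 67]  # hypothetical continuous measurements
edges = [30, 50]             # assumed cut points
labels = ["under 30", "30-49", "50+"]

categories = bin_values(ages, edges, labels)
print(categories)           # ['under 30', '30-49', '30-49', '50+', '50+']
print(Counter(categories))  # category counts, ready for a contingency table
```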

How Does the Chi-Square Test Compare to Other Correlation Tests?

The chi-square test works with categorical variables and is mainly used for independence testing, whereas correlation tests such as Pearson’s measure the strength and direction of a linear relationship between continuous variables. In other words, Pearson’s correlation assesses how two numeric variables move together, while the chi-square test determines whether two categorical variables are independent. It’s well suited to frequency data, but the chi-square statistic is not itself a measure of strength: it grows with sample size, so a large value does not necessarily mean a strong association.

Conclusion

By understanding the chi-square test of independence, you can analyze with confidence whether two categorical variables are related. The test does not demand a huge dataset, but it does depend on its assumptions being met: with small samples, check that the expected counts are adequate, and switch to an alternative such as Fisher’s Exact Test when they are not. Mastering this test helps you make informed decisions based on your data and keeps your interpretations accurate and meaningful.