A z-score shows how far a data point is from the average in terms of standard deviations. If the z-score is positive, the value is above the mean; if it is negative, the value is below. For example, a z-score of 2 means the value is two standard deviations above the average. Because z-scores put values on a common scale, they make it easy to compare different sets of data and to see whether a value is unusual.

Key Takeaways

- Z-scores show how far a data point is from the mean in terms of standard deviations.

- Standardization converts raw data into a common scale, making comparisons easier.

- A positive z-score indicates a value above the mean; a negative z-score indicates below.

- Z-scores help identify whether a data point is typical or rare within the distribution.

- They are useful for comparing different datasets and assessing the likelihood of specific values.

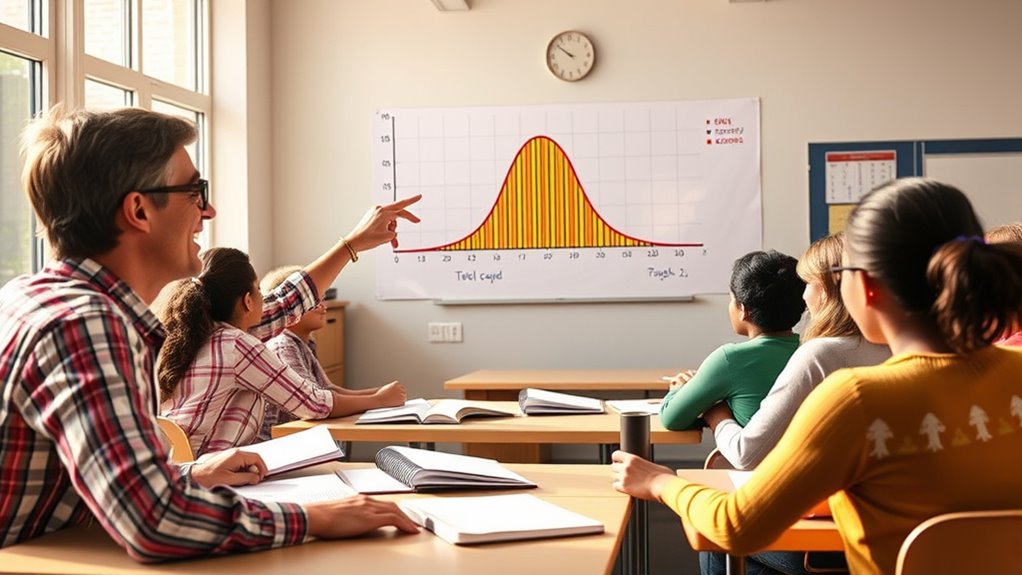

Z-scores are a key statistical tool for understanding how a specific data point compares to the overall distribution. When you work with data, it's often helpful to see where a particular value falls relative to the rest, and that's where standardization comes in. Standardization transforms raw data onto a common scale, making it easier to interpret and compare data points. The core idea is to convert raw scores into z-scores, which tell you how many standard deviations a point lies from the mean. On this standardized scale, the properties of the normal distribution become particularly useful.

Understanding the normal distribution matters because many real-world measurements are approximately bell-shaped, and, by the central limit theorem, averages computed from large samples tend toward a normal distribution even when the individual values do not. The normal distribution is symmetric around the mean, with about 68% of values within one standard deviation, 95% within two, and 99.7% within three. Once your data is standardized into z-scores, you can use these properties to judge where a specific data point lies relative to the rest. For example, a z-score of 2 indicates that the value is two standard deviations above the mean; under a normal distribution only about 2.3% of values lie that high (the 68-95-99.7 rule rounds this to roughly 2.5%). Conversely, a z-score of -1.5 means the value is 1.5 standard deviations below the mean, and about 6.7% of values fall that low, so it is somewhat unusual but far from rare.
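To check these figures yourself, here is a small sketch using Python's standard-library `statistics.NormalDist` (Python 3.8+). It assumes a standard normal distribution (mean 0, standard deviation 1), which is exactly the scale z-scores live on, and reads tail probabilities off the cumulative distribution function `cdf`.

```python
from statistics import NormalDist

# Standard normal distribution: the scale that z-scores are expressed on
std_normal = NormalDist(mu=0, sigma=1)

# Share of values within k standard deviations of the mean (the 68-95-99.7 rule)
for k in (1, 2, 3):
    share = std_normal.cdf(k) - std_normal.cdf(-k)
    print(f"within {k} sd: {share:.1%}")

# Upper tail beyond z = 2 and lower tail below z = -1.5
p_above_2 = 1 - std_normal.cdf(2)
p_below_neg_1_5 = std_normal.cdf(-1.5)
print(f"P(Z > 2)    = {p_above_2:.4f}")
print(f"P(Z < -1.5) = {p_below_neg_1_5:.4f}")
```

Running it prints roughly 68.3%, 95.4%, and 99.7% for the three bands, about 0.0228 for P(Z > 2), and about 0.0668 for P(Z < -1.5).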

The standardization process is straightforward: subtract the mean from the raw score, then divide the result by the standard deviation, z = (x - μ) / σ, where x is the raw score, μ is the mean, and σ is the standard deviation. This converts any raw data point into a z-score. Once standardized, data points from different datasets can be compared even if they use different scales or units, which is especially useful in real-world scenarios such as comparing scores from different exams or measuring performance across various metrics. Z-scores also help you identify outliers, data points that lie far from the mean, and, when the data is roughly normal, assess how likely values that extreme are.
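As a minimal sketch of the formula in Python, the snippet below standardizes one score from each of two exams with different scales. The exam data, the students, and the `z_score` helper are hypothetical, made up for illustration. It uses the sample standard deviation (`statistics.stdev`); you would swap in `statistics.pstdev` if your data is the entire population.

```python
from statistics import mean, stdev

def z_score(x: float, mu: float, sigma: float) -> float:
    """Return how many standard deviations x lies from mu (hypothetical helper)."""
    if sigma == 0:
        raise ValueError("standard deviation must be non-zero")
    return (x - mu) / sigma

# Hypothetical scores from two differently scaled exams
exam_a = [62, 70, 75, 78, 80, 85, 90]  # scored out of 100
exam_b = [18, 21, 22, 24, 25, 27, 30]  # scored out of 40

alice_a = 85  # Alice's score on exam A
bob_b = 27    # Bob's score on exam B

# Standardize each score against its own exam's mean and sample standard deviation
z_alice = z_score(alice_a, mean(exam_a), stdev(exam_a))
z_bob = z_score(bob_b, mean(exam_b), stdev(exam_b))
print(f"Alice: z = {z_alice:.2f}, Bob: z = {z_bob:.2f}")
```

With these made-up numbers, Alice's 85 out of 100 comes out at z ≈ 0.84 and Bob's 27 out of 40 at z ≈ 0.79, so Alice did slightly better relative to her classmates even though the raw scores aren't directly comparable.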

Frequently Asked Questions

How Do Z-Scores Relate to Standard Deviations?

Z-scores tell you how many standard deviations a data point is from the mean, which shows its position relative to the average. To calculate a z-score, you take the difference between the data point and the mean, then divide by the standard deviation. For example, a score of 85 on a test with a mean of 70 and a standard deviation of 10 has a z-score of (85 - 70) / 10 = 1.5. This process is a form of data normalization, allowing you to compare scores across different datasets or scales.

Can Z-Scores Be Negative or Only Positive?

Z-scores can be negative or positive, depending on where the data point lies relative to the mean. A negative z-score means the value is below the average, a positive z-score means it is above, and a z-score of 0 means it equals the mean. The sign tells you the direction of the deviation and the magnitude tells you its size, so a z-score of -0.5 is slightly below average while -3 is far below it.

How Are Z-Scores Used in Real-World Data Analysis?

In practice, z-scores are widely used for outlier detection and quality control. You use them to spot data points that deviate sharply from the norm, flagging potential measurement errors or rare events; a common rule of thumb treats values with |z| greater than 3 as suspicious. In real-world analysis, z-scores help you make informed decisions, monitor processes, and keep results consistent, turning raw numbers into meaningful statements about performance and quality.
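Here is a minimal sketch of z-score outlier flagging in Python. The `flag_outliers` helper, the fill-weight readings, and the cutoff of 3 are assumptions chosen for illustration, not a standard from any particular quality-control method. In small samples a single extreme value inflates the standard deviation, which can keep its own z-score under the cutoff, so the example uses a moderately sized batch.

```python
from statistics import mean, stdev

def flag_outliers(values, threshold=3.0):
    """Return (value, z) pairs whose |z-score| exceeds threshold (hypothetical helper)."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    scored = [(v, (v - mu) / sigma) for v in values]
    return [(v, z) for v, z in scored if abs(z) > threshold]

# Hypothetical fill weights (grams) from a production line; one reading looks off
weights = [498, 501, 499, 502, 500, 497, 503, 500, 501, 499,
           500, 502, 498, 501, 499, 500, 530]

flagged = flag_outliers(weights)
for value, z in flagged:
    print(f"{value} g is {z:.2f} standard deviations from the mean")
```

Only the 530 g reading is flagged, at roughly 3.8 standard deviations above the mean.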

What Assumptions Are Made When Calculating Z-Scores?

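Computing a z-score only requires the mean and standard deviation, but interpreting it assumes a few things. The mean and standard deviation should be accurate: if they are estimated from a sample, that sample should be representative of the population. And if you translate z-scores into probabilities or percentiles using the normal distribution, you are assuming the data is approximately normal. When these assumptions aren't met, your z-scores can be misleading. In particular, heavily skewed data or extreme outliers distort the mean and standard deviation, so check that your data is roughly symmetric before relying on normal-based interpretations.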

Are Z-Scores Applicable to Non-Normal Distributions?

Z-scores can be calculated for any distribution, but their usual interpretation depends on the distribution's shape. When data isn't normal, the 68-95-99.7 percentages and normal-table probabilities no longer apply, so a z-score can make a value look more or less unusual than it really is. In those cases, consider alternatives such as percentile ranks or robust statistics based on the median, which don't rely on normality assumptions. These tools help you interpret data accurately regardless of its distribution shape.
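The sketch below contrasts these alternatives with ordinary z-scores on a small, right-skewed set of hypothetical response times. `percentile_rank` uses one common definition (the percentage of values at or below x), and `modified_z_scores` follows the modified z-score of Iglewicz and Hoaglin, which replaces the mean with the median and the standard deviation with the median absolute deviation (MAD), scaled by 0.6745; the 3.5 cutoff is their suggested convention. Both helpers are illustrative, not a library API.

```python
from statistics import mean, median, stdev

def percentile_rank(values, x):
    """Percent of values less than or equal to x (one common definition)."""
    return 100 * sum(v <= x for v in values) / len(values)

def modified_z_scores(values):
    """Robust z-scores based on the median and the median absolute deviation (MAD)."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        raise ValueError("MAD is zero; modified z-scores are undefined")
    # 0.6745 rescales MAD so it is comparable to a standard deviation under normality
    return [0.6745 * (v - med) / mad for v in values]

# Hypothetical, right-skewed response times in milliseconds
times = [120, 125, 130, 132, 135, 140, 150, 180, 400, 2500]

# Robust approach: flag |modified z| > 3.5
robust = modified_z_scores(times)
robust_outliers = [t for t, m in zip(times, robust) if abs(m) > 3.5]

# Classic approach: flag |z| > 3 using the mean and sample standard deviation
mu, sigma = mean(times), stdev(times)
classic_outliers = [t for t in times if abs(t - mu) / sigma > 3]

print("percentile rank of 180 ms:", percentile_rank(times, 180))
print("robust outliers:", robust_outliers)
print("classic |z| > 3 outliers:", classic_outliers)
```

Here the ordinary |z| > 3 rule flags nothing, because the 2,500 ms value inflates the standard deviation so much that even its own z-score stays below 3, while the median-based scores flag both 400 ms and 2,500 ms. The 180 ms response sits at the 80th percentile.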

Conclusion

Think of a z-score as a compass for navigating data: it tells you how far a value sits from the average, and in which direction. By understanding z-scores, you can approach a new dataset with more clarity and confidence. They're not just numbers; they show how you, or anyone else, fit into the bigger picture. Embrace this tool, and let it lead you to clearer insights.