Neural network interpretability helps you understand how inputs influence model outputs, which supports trust and transparency. Start with feature attribution methods such as Integrated Gradients, SHAP, or LIME to see which inputs drive a prediction. These techniques assign importance scores to input features, so they describe the model's input-output behavior rather than exposing its internal computations directly. By applying them, you can debug, improve, and explain your neural network more effectively.

Key Takeaways

- Overview of popular feature attribution methods like Integrated Gradients, SHAP, and LIME for neural network interpretability.

- Step-by-step guidance on analyzing trained models to identify feature importance and influence.

- Techniques for visualizing attribution scores to understand model decision-making processes.

- Best practices for validating explanations and ensuring model focus on relevant features.

- Tips for integrating interpretability tools into development workflows to improve transparency and trust.

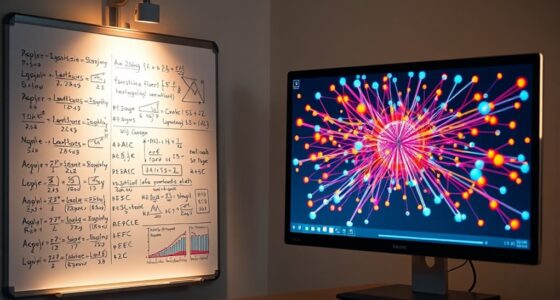

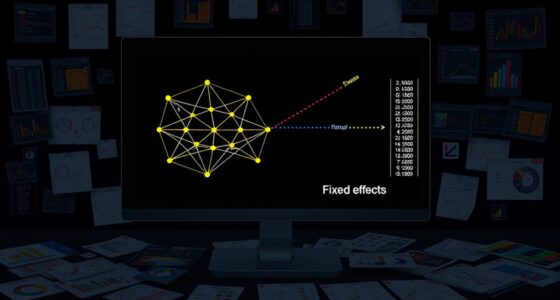

Neural network interpretability is essential for understanding how these complex models make decisions, especially as they become more integrated into critical applications. When working with neural networks, you need to grasp not just the outputs but also the reasoning behind them. Model transparency covers the techniques that reveal how inputs influence outputs, giving you a clearer picture of what the model “sees” as important. With that picture, you can judge when to trust a prediction and spot potential biases or errors. In practice this often means using feature attribution methods, which assign an importance score to each input feature to estimate its contribution to the model’s decision.

Model transparency helps you trust neural network decisions and identify biases by showing which features matter.

Feature attribution is a key tool in enhancing model transparency. It estimates which features drive the model’s predictions, making it easier to interpret complex neural networks. For example, if you’re working with image data, feature attribution might highlight specific pixels or regions that influence the output. In tabular data, it could reveal which variables most impact the result. These insights help you validate whether the model is focusing on relevant aspects of the data or being misled by spurious correlations. The common methods arrive at their scores differently: Integrated Gradients accumulates the model’s gradients along a path from a baseline input to the actual input, SHAP builds on Shapley values from cooperative game theory, and LIME fits a simple surrogate model around a single prediction. Each provides attribution scores that offer a more understandable view of the model’s behavior.
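To make the idea concrete, here is a minimal sketch of Integrated Gradients in plain Python. It assumes a tiny logistic model with hand-picked weights standing in for a trained network, an all-zeros baseline, and a midpoint-rule approximation of the path integral. The names `predict`, `gradient`, and `integrated_gradients` are illustrative and do not come from any library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    # Hypothetical stand-in for a trained network: a single logistic unit.
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def gradient(x, weights, bias):
    # Analytic gradient of the logistic output with respect to each input.
    p = predict(x, weights, bias)
    return [p * (1.0 - p) * w for w in weights]

def integrated_gradients(x, baseline, weights, bias, steps=200):
    # Average the gradient at evenly spaced points on the straight line
    # from the baseline to x, then scale by (x - baseline) per feature.
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint rule
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        grad = gradient(point, weights, bias)
        total = [t + g for t, g in zip(total, grad)]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]

# Assumed example values; the third feature has zero weight on purpose.
weights = [2.0, -1.0, 0.0]
bias = 0.1
x = [1.0, 0.5, 3.0]
baseline = [0.0, 0.0, 0.0]

attributions = integrated_gradients(x, baseline, weights, bias)
output_change = predict(x, weights, bias) - predict(baseline, weights, bias)
print(attributions, sum(attributions), output_change)
```

A useful sanity check is that the attributions sum to approximately the difference between the model output at the input and at the baseline. The feature the model ignores receives an attribution of zero, even though its input value is large.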

To get started with neural network interpretability, you should first familiarize yourself with these techniques. Applying them involves analyzing your trained model and examining the feature importance scores across different inputs. This process is often iterative: you might discover that your model depends too heavily on certain features or ignores others, prompting you to refine your approach. By doing so, you improve the model’s transparency, making it easier to explain its decisions to stakeholders or regulators. Seeing how these techniques behave on real examples also helps you communicate your findings and build trust with end users.
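One simple way to examine importance across different inputs is to compute a per-input attribution and then average its absolute value over a sample of inputs. The sketch below uses occlusion (replacing one feature at a time with a baseline value and measuring the change in output) because it needs no gradients. This is a swapped-in, simpler technique, not Integrated Gradients, SHAP, or LIME. The `model` function, the sample inputs, and the zero baseline are all assumptions made for illustration.

```python
import math

def model(x):
    # Hypothetical stand-in for a trained network; it ignores x[2].
    z = 1.5 * x[0] - 2.0 * x[1] + 0.0 * x[2]
    return 1.0 / (1.0 + math.exp(-z))

def occlusion_attribution(predict, x, baseline):
    # Score each feature by how much the output changes when that
    # feature alone is replaced with its baseline value.
    full = predict(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        scores.append(full - predict(occluded))
    return scores

def mean_abs_attribution(predict, inputs, baseline):
    # Aggregate per-input scores into one global importance per feature.
    totals = [0.0] * len(baseline)
    for x in inputs:
        for i, s in enumerate(occlusion_attribution(predict, x, baseline)):
            totals[i] += abs(s)
    return [t / len(inputs) for t in totals]

# Assumed sample of inputs and an all-zeros baseline.
inputs = [[1.0, 0.2, 5.0], [0.3, 1.0, -2.0], [-0.5, 0.8, 0.7], [2.0, -1.0, 1.0]]
baseline = [0.0, 0.0, 0.0]

global_importance = mean_abs_attribution(model, inputs, baseline)
# Feature indices ordered from most to least important.
ranking = sorted(range(len(global_importance)), key=lambda i: -global_importance[i])
print(global_importance, ranking)
```

Averaging absolute values keeps positive and negative contributions from cancelling out, at the cost of hiding the direction of each feature's effect. Keep the per-input scores as well if direction matters.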

Understanding feature attribution also helps in debugging and improving your neural network. If you notice that the model attributes importance to irrelevant features, you can take steps to address this, such as feature engineering or regularization. Ultimately, increasing model transparency through feature attribution helps you build more reliable and trustworthy neural networks, especially in applications where interpretability isn’t just a preference but a necessity. As you advance, combining several of these techniques gives you a broader toolkit, and cross-checking their results helps you interpret even complex models with more confidence.

Frequently Asked Questions

How Can Interpretability Improve Neural Network Deployment in Real-World Applications?

Interpretability makes deployment easier because it lets users see why a model produced a given decision instead of asking them to accept it on faith. When users can check that a prediction rests on sensible features, they are more likely to trust the system and rely on it. Explanations also give you a way to catch problems before and after release, such as a model that leans on a spurious correlation. Focusing on interpretability therefore helps your neural network become more transparent, more trustworthy, and better accepted in real-world applications.

What Are the Limitations of Current Interpretability Techniques?

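Current interpretability techniques have real limitations, especially with black box models, where you can only probe behavior from the outside. Attribution scores are approximations: LIME’s explanations can vary between runs because they rely on random sampling, Integrated Gradients depends on the baseline you choose, and exact Shapley values are expensive to compute, so SHAP often relies on approximations. Tools like feature importance help, but they don’t always reveal complex interactions between features, and an explanation that looks plausible does not by itself show that a model is free of bias. These limitations mean you should combine methods, validate explanations against what you know about the data, and stay cautious about overreliance on interpretability alone.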
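The point about interactions can be seen in a small example. The sketch below assumes a hypothetical model whose output is purely the product of two features, and compares two simple single-feature scoring schemes: removing one feature at a time from the full input, and adding one feature at a time to the baseline. Both schemes and all names here are illustrative only.

```python
def model(x):
    # Hypothetical model whose output depends only on an interaction.
    return x[0] * x[1]

def remove_one(predict, x, baseline):
    # Change in output when a single feature is reset to its baseline.
    full = predict(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        scores.append(full - predict(occluded))
    return scores

def add_one(predict, x, baseline):
    # Change in output when a single feature is switched on from the baseline.
    base = predict(baseline)
    scores = []
    for i in range(len(x)):
        probe = list(baseline)
        probe[i] = x[i]
        scores.append(predict(probe) - base)
    return scores

x = [1.0, 1.0]
baseline = [0.0, 0.0]

total_effect = model(x) - model(baseline)         # 1.0
removal_scores = remove_one(model, x, baseline)   # [1.0, 1.0]
addition_scores = add_one(model, x, baseline)     # [0.0, 0.0]
print(total_effect, removal_scores, addition_scores)
```

The total effect is 1.0, yet one scheme assigns a combined score of 2.0 and the other 0.0. Neither list of per-feature numbers can express that the effect exists only when both features are present together, which is why per-feature scores deserve caution when interactions are likely.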

How Does Interpretability Impact Model Performance and Accuracy?

Think of interpretability as a window into how your model makes decisions. It does not raise accuracy by itself, but it gives you the evidence you need to improve the model. Attribution scores can show that a model relies on a spurious feature or ignores a relevant one, which you can then address through better features, better data, or regularization. Catching these problems early makes biases easier to identify and correct, and it gives you firmer grounds for confidence that the model will perform well across diverse data.

Are There Specific Tools Recommended for Interpretability Analysis?

You should explore tools like LIME and SHAP for interpretability analysis. Both are open-source Python libraries that compute feature attributions and include plotting utilities for visualizing them. If you work in PyTorch, Captum provides an implementation of Integrated Gradients along with other attribution methods. These tools help you understand how different features influence your model’s predictions, making it easier to identify potential biases or errors. The clearer view of your model’s decision-making process supports more transparent, reliable AI development.

How Can Interpretability Methods Be Integrated Into the Development Pipeline?

Integrating interpretability methods into development is about more than transparency: it also supports model debugging and stakeholder communication. You can embed techniques like feature importance and layer-wise relevance propagation into your pipeline so that attribution results are produced during development and review phases, for example as part of model evaluation. This proactive approach helps identify issues early and conveys model decisions clearly, making your workflow more robust and your stakeholder reports easier to justify.
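One way to embed such a check is a small gate that runs alongside your other evaluation steps and flags a model when too much attribution falls on features you consider irrelevant. The sketch below is a hypothetical example: the feature names, the attribution values, the flagged set, and the 10% threshold are all assumptions, and the scores would come from whichever attribution method you already use.

```python
def attribution_share(attributions, feature_names, flagged):
    # Fraction of total absolute attribution that lands on flagged features.
    total = sum(abs(a) for a in attributions)
    if total == 0.0:
        return 0.0
    flagged_mass = sum(
        abs(a) for a, name in zip(attributions, feature_names) if name in flagged
    )
    return flagged_mass / total

def check_attributions(attributions, feature_names, flagged, max_share=0.10):
    # Returns (passed, share) so the caller can log the share or fail a build.
    share = attribution_share(attributions, feature_names, flagged)
    return share <= max_share, share

# Hypothetical feature names and attribution scores for illustration.
feature_names = ["income", "debt_ratio", "row_id"]
flagged = {"row_id"}  # features the model should not rely on

healthy_scores = [0.42, -0.31, 0.02]
suspect_scores = [0.10, 0.10, 0.50]

passed, share = check_attributions(healthy_scores, feature_names, flagged)
suspect_passed, suspect_share = check_attributions(suspect_scores, feature_names, flagged)
print(passed, share, suspect_passed, suspect_share)
```

Returning the share along with the pass or fail result lets you track the number over time instead of only reacting when the threshold is crossed. The threshold itself is a judgment call that depends on your data and attribution method.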

Conclusion

By understanding neural network interpretability, you gain clarity amid complexity. Attribution methods make models more transparent, but their inner workings can still challenge your intuition, and no single explanation tells the whole story. You might think that clarity equals simplicity, but often it’s the nuanced insights that reveal the most about your model. The more you decipher, the more you realize how much remains to be explained. Working between transparency and complexity, you gain not just answers but a better sense of what your model can and cannot be trusted to do.