Bayesian inference helps you update your beliefs as new evidence comes in. You start with an initial belief, called a prior, and combine it with the data you gather using a mathematical rule known as Bayes’ theorem. The result is an updated belief, called a posterior, which reflects both your prior and the data. Each posterior can serve as the prior for the next round of data, so you refine your understanding over time. Keep exploring, and you’ll see how this approach can guide evidence-based decisions.

Key Takeaways

- Bayesian inference updates beliefs systematically by combining prior knowledge with new evidence using probabilities.

- It uses Bayes’ theorem to compute the posterior, reflecting an improved understanding after considering data.

- The process is iterative, allowing continuous refinement as new data becomes available.

- It effectively manages uncertainty and scarce data, making it valuable in fields like medicine, finance, and machine learning.

- Bayesian inference treats probability as a measure of belief that evolves, promoting an adaptable, evidence-driven approach to decision-making.

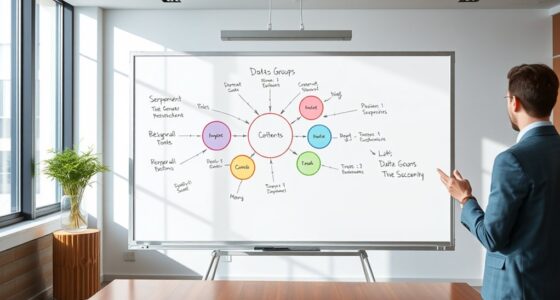

Have you ever wondered how scientists update their beliefs in light of new evidence? This process is at the heart of Bayesian inference, a powerful method for making sense of uncertain information. It begins with a prior: your belief about a hypothesis or parameter before you see the data. Think of this prior as your initial guess, shaped by previous knowledge, experience, or assumptions. When new data arrives, instead of discarding your initial belief, you update it into a posterior, the belief you hold after seeing the data. This approach lets you incorporate fresh evidence systematically, adjusting your confidence in a logical, probabilistic way.

In simple terms, updating a prior means combining what you initially believe with new evidence to get a clearer picture. You begin with a prior probability, which encapsulates your initial stance on a hypothesis. As data is collected, you compute the likelihood: how probable the observed data would be if the hypothesis were true. Weighting the prior by this likelihood, and normalizing so the probabilities sum to one, gives the posterior probability. The posterior is an updated belief that reflects both what you initially thought and what the evidence suggests. The process can be repeated as new data becomes available, with each posterior serving as the next prior.
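For a single yes-or-no hypothesis, this update can be written out in a few lines: posterior = likelihood × prior / evidence, where the evidence is the total probability of the data under both possibilities. The sketch below is only an illustration. The function name `bayes_update` and the diagnostic-test numbers (1% prevalence, 95% sensitivity, 5% false-positive rate) are assumptions made up for the example.

```python
def bayes_update(prior, p_data_given_h, p_data_given_not_h):
    """Return P(H | data) for a binary hypothesis H using Bayes' theorem."""
    # Evidence: total probability of seeing the data, whether or not H is true.
    evidence = p_data_given_h * prior + p_data_given_not_h * (1.0 - prior)
    # Posterior: the prior reweighted by how well H explains the data.
    return p_data_given_h * prior / evidence


# Hypothetical numbers: a condition with 1% prevalence, a test that detects it
# 95% of the time, and a 5% chance of a false positive.
prior = 0.01
posterior = bayes_update(prior, p_data_given_h=0.95, p_data_given_not_h=0.05)
print(f"P(condition | positive test) = {posterior:.3f}")
```

With these made-up numbers, a positive result raises the probability from 1% to about 16%: the evidence shifts the belief substantially, but the low prior still matters.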

The core of Bayesian inference lies in computing the posterior. By applying Bayes’ theorem, you mathematically combine the prior with the likelihood of the observed data. Bayes’ theorem acts as a rule for updating probabilities, so that your new belief, the posterior, accounts for both your prior knowledge and the data you have seen. This is especially useful when data is scarce or noisy, because the prior carries more weight when there is little data and less as data accumulates. As more evidence comes in, the posterior typically becomes more concentrated, guiding your decisions and predictions with increasing confidence.
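One way to see the posterior tighten as evidence accumulates is a Beta-Binomial model, a standard textbook case where the update reduces to adding counts. The sketch below assumes a uniform Beta(1, 1) prior on a success rate and three hypothetical batches of data. The helper names and the batch counts are invented for illustration.

```python
import math


def beta_update(alpha, beta, successes, failures):
    """Conjugate update: a Beta prior plus binomial data gives a Beta posterior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    return alpha / (alpha + beta)


def beta_sd(alpha, beta):
    total = alpha + beta
    return math.sqrt(alpha * beta / (total ** 2 * (total + 1)))


# Uniform prior over the unknown success rate (an assumption for this sketch).
alpha, beta = 1.0, 1.0
history = [(alpha, beta)]

# Hypothetical batches of (successes, failures), arriving one after another.
batches = [(3, 1), (14, 6), (70, 30)]
for successes, failures in batches:
    # Yesterday's posterior is today's prior.
    alpha, beta = beta_update(alpha, beta, successes, failures)
    history.append((alpha, beta))
    print(f"Beta({alpha:.0f}, {beta:.0f}): "
          f"mean={beta_mean(alpha, beta):.3f}, sd={beta_sd(alpha, beta):.3f}")
```

In this example the posterior standard deviation falls with each batch, which is the "more concentrated" behavior described above. Other models generally need numerical methods rather than a closed-form update.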

You might find that Bayesian inference offers a more intuitive way to think about uncertainty than classical (frequentist) methods. Instead of relying only on point estimates or binary yes-or-no conclusions, it treats probability as a degree of belief that changes as evidence arrives, and it reports a full distribution over the possible answers. This makes it particularly valuable in fields like medicine, finance, and machine learning, where information is often incomplete or noisy. By understanding how a prior is updated into a posterior, you can approach complex problems with a flexible, evidence-based mindset, continuously refining your beliefs in light of new data.

In essence, Bayesian inference empowers you to be a more adaptable thinker. It’s not about having all the answers upfront but about updating your understanding as new evidence comes in, making your conclusions more reliable and grounded in reality.

Frequently Asked Questions

How Does Bayesian Inference Compare to Frequentist Methods?

Frequentist methods treat parameters as fixed but unknown and interpret probability as a long-run frequency over repeated experiments. Bayesian methods treat parameters as uncertain quantities with their own probability distributions and interpret probability as a degree of belief. Because Bayesian approaches incorporate prior beliefs, their results are sensitive to the choice of prior, especially with limited data. You might find Bayesian inference more adaptable for complex models, but it requires careful attention to prior sensitivity to avoid biased results.

Can Bayesian Methods Handle Large Datasets Efficiently?

Imagine trying to assemble a giant puzzle: Bayesian methods face similar scalability challenges with large datasets. They offer rich insights, but exact computation of the posterior becomes slow as the data and the model grow. Approximate techniques, such as variational inference and sampling methods that work on subsets of the data, make Bayesian approaches more practical for big data. Still, you trade some accuracy for speed, and improving that trade-off remains an active area of work.

What Are Common Pitfalls in Applying Bayesian Inference?

When applying Bayesian inference, watch out for model misspecification, which leads to inaccurate results if your model doesn’t match reality. Prior sensitivity can also skew your outcomes, especially if your priors are too strong or poorly chosen. You might also underestimate uncertainty or overlook a sampler that has not converged. To avoid these problems, validate your model against the data, rerun the analysis with different priors, and check convergence diagnostics for your sampling method.

How Much Do Priors Influence the Results?

Priors can influence your conclusions considerably, especially when the prior is strongly informative or the dataset is small. Your choice of prior sets the initial belief, which is then updated with data. With little data, the posterior stays close to the prior, so a poorly chosen prior can skew the results. As data accumulates, the likelihood dominates and reasonable priors tend to lead to similar posteriors. It is therefore essential to justify your prior and check how much the results change under alternative choices.
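A simple sensitivity check is to rerun the same analysis under several priors and compare the results. The sketch below does this for a success rate with a Beta prior, using the closed-form posterior mean. The three priors and both datasets are hypothetical, chosen only to contrast a small sample with a large one.

```python
def posterior_mean(prior_alpha, prior_beta, successes, trials):
    """Posterior mean of a success rate under a Beta prior and binomial data."""
    return (prior_alpha + successes) / (prior_alpha + prior_beta + trials)


# Three illustrative priors (assumptions for this sketch, not recommendations).
priors = {
    "flat Beta(1, 1)": (1, 1),
    "skeptical Beta(2, 8)": (2, 8),
    "optimistic Beta(8, 2)": (8, 2),
}

# Same observed rate (70%), very different sample sizes.
datasets = {"small": (7, 10), "large": (700, 1000)}


def spread(successes, trials):
    """Gap between the largest and smallest posterior mean across the priors."""
    means = [posterior_mean(a, b, successes, trials) for a, b in priors.values()]
    return max(means) - min(means)


for label, (successes, trials) in datasets.items():
    for name, (a, b) in priors.items():
        mean = posterior_mean(a, b, successes, trials)
        print(f"{label:>5} data, {name:<22} -> posterior mean {mean:.3f}")
    print(f"{label:>5} data, spread across priors: {spread(successes, trials):.3f}")
```

With 10 trials the posterior means range from 0.45 to 0.75 depending on the prior. With 1000 trials they differ by less than 0.01, which illustrates how data eventually outweighs the prior.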

Is Bayesian Inference Suitable for Real-Time Decision-Making?

You might wonder if Bayesian inference fits real-time decision-making. It can, provided the computational cost of each update stays within your time budget. Bayesian methods provide robust, probabilistic insights, but general-purpose posterior computation often requires intensive calculations, which could slow down your decisions. With models that admit fast updates, or with efficient approximate algorithms, you can apply Bayesian inference in real-time scenarios and make quick, informed choices as data evolves.
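As one illustration of a fast update, the sketch below tracks an unknown mean from a stream of noisy readings. It assumes Gaussian noise with a known variance and a Gaussian prior, in which case each new reading updates the posterior in constant time. The class name `OnlineNormalMean` and the sample readings are hypothetical.

```python
class OnlineNormalMean:
    """Posterior over an unknown mean, assuming Gaussian noise of known variance."""

    def __init__(self, prior_mean, prior_var, noise_var):
        self.mean = prior_mean
        self.var = prior_var
        self.noise_var = noise_var

    def update(self, x):
        # Gain: how much weight the new reading gets relative to current belief.
        gain = self.var / (self.var + self.noise_var)
        # Move the mean toward the reading and shrink the uncertainty.
        self.mean += gain * (x - self.mean)
        self.var *= 1.0 - gain
        return self.mean, self.var


# Hypothetical sensor readings arriving one at a time.
estimator = OnlineNormalMean(prior_mean=18.0, prior_var=4.0, noise_var=1.0)
for reading in [20.4, 19.8, 20.1, 20.6]:
    mean, var = estimator.update(reading)
    print(f"reading={reading:.1f} -> posterior mean={mean:.2f}, variance={var:.3f}")
```

Each update uses only the current mean and variance, so no past data needs to be stored or reprocessed. Models without this kind of closed-form update usually need approximations to run at similar speed.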

Conclusion

Think of Bayesian inference as a compass guiding you through the fog of uncertainty. With each new piece of evidence, you adjust your direction, steadily homing in on the truth. Like a lighthouse illuminating the way, it brightens your understanding and clears the shadows of doubt. Embrace this journey, knowing that each update is a step closer to clarity. Trust the process, and let your curiosity steer you toward knowledge’s horizon.