In this RapidMiner fast-track tutorial, you'll learn how to turn raw data into meaningful insights with RapidMiner's drag-and-drop interface. You'll explore visualizations such as bar charts and scatter plots for interpreting your results. You'll also see how to deploy models so you can act on insights faster. Along the way, you'll discover how to streamline your data workflow for rapid decision-making.

Key Takeaways

- Explore RapidMiner’s drag-and-drop interface for quick data visualization and analysis setup.

- Learn to create common visualizations like bar charts and scatter plots to interpret data effectively.

- Follow step-by-step instructions for building and validating predictive models efficiently.

- Discover deployment options, such as web service APIs or embedded applications, that put insights into action quickly.

- Use interactive tools to refine models and visualizations and speed up data-driven decision-making.

Want to get productive with data analysis in RapidMiner quickly? Two skills matter most: visualizing data effectively and deploying models. RapidMiner's interface lets you move from raw data to insight swiftly. Clear visualizations, however, are often the difference between noticing a pattern and missing it. Good charts help you spot patterns, trends, and outliers, which makes your analysis more actionable. As you build models, use charts and graphs to interpret results, not only to present them. A solid grasp of model accuracy, and of how it affects your conclusions, will also help you refine your analyses and make them more reliable.
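
To make "model accuracy" concrete: accuracy is the share of predictions that match the true labels. It is one of the criteria RapidMiner's Performance operators can report. The plain-Python sketch below is not RapidMiner code; `accuracy` and `confusion_counts` are hypothetical helper names used only for illustration.

```python
from collections import Counter


def accuracy(actual, predicted):
    """Fraction of predictions that match the true labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("need at least one example")
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


def confusion_counts(actual, predicted):
    """Count (true label, predicted label) pairs: the cells of a confusion matrix."""
    return Counter(zip(actual, predicted))


actual = ["yes", "no", "yes", "yes", "no"]
predicted = ["yes", "no", "no", "yes", "yes"]
print(accuracy(actual, predicted))  # 0.6
print(confusion_counts(actual, predicted))
```

A single accuracy figure can hide class imbalance. Checking the confusion counts alongside it shows *which* kinds of mistakes the model makes.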

Master data analysis quickly in RapidMiner by learning visualization and model deployment techniques.

Once you've developed a robust model, your next goal is to deploy it. RapidMiner simplifies deployment by integrating with a range of platforms, so you can put your analytics into production quickly. You might deploy a model as a web service, embed it in an application, or integrate it with existing systems. In each case RapidMiner provides streamlined options that save time. Planning for deployment early ensures your insights don't stay theoretical but actively inform real-world business decisions. This end-to-end approach helps you get the most value from your analysis.

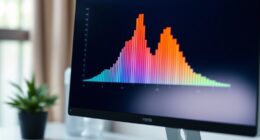

Getting started with data visualization in RapidMiner means choosing visual components that represent your data accurately. Thanks to the drag-and-drop interface, you can create bar charts, scatter plots, histograms, and more with minimal effort. These visualizations help you communicate findings clearly to stakeholders, whatever their technical background. RapidMiner's visualizations are also interactive: you can drill down into details or adjust parameters on the fly, which helps you refine your analysis. As you become familiar with these features, visual storytelling will become an integral part of your analysis process.

Model deployment is equally accessible in RapidMiner. Once your model is trained and validated, you can deploy it directly from the platform without extensive coding. RapidMiner lets you expose models as web service APIs, deploy them to cloud services, or embed them in existing applications. This flexibility means you can deliver insights quickly and respond to changing business needs. By mastering both visualization and deployment, you can analyze data effectively and also turn those analyses into tangible, actionable solutions.
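
Once a model is exposed as a web service, any client that can send HTTP requests can call it. The sketch below is a generic Python client built only on the standard library. Several details are assumptions, because the real request format depends on how your service is configured:

- the endpoint URL is a placeholder;
- the payload is assumed to be a JSON list of row objects;
- authentication is assumed to use a bearer token.

The names `SCORING_URL`, `build_scoring_request`, and `score` are hypothetical.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the URL of your own deployed scoring service.
SCORING_URL = "https://example.com/api/score"


def build_scoring_request(url, rows, token=None):
    """Build (but do not send) a JSON POST request carrying the rows to score."""
    body = json.dumps(rows).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token is not None:
        # Assumed auth scheme; your service may use something else.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def score(rows, url=SCORING_URL, token=None, timeout=10):
    """Send rows to the scoring service and return the decoded JSON response."""
    request = build_scoring_request(url, rows, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from sending keeps the payload logic testable without a live service. Calling `score([{"age": 42, "plan": "basic"}])` would perform the actual request against whatever endpoint you configure.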

Frequently Asked Questions

Is RapidMiner Suitable for Beginners With No Coding Experience?

Yes, RapidMiner is suitable for beginners with no coding experience. Its visual programming approach and drag-and-drop interface make it easy to learn and use. You can build data workflows intuitively, without needing to write code. This user-friendly design helps you focus on understanding data analysis concepts while quickly creating models. So, even if you’re new, RapidMiner provides a smooth entry into data science and machine learning.

Can RapidMiner Handle Large-Scale Data Processing Efficiently?

Generally, yes. Performance depends on your hardware and on the size and complexity of your data. RapidMiner can process data at high throughput, but very large datasets can cause bottlenecks if your processes aren't optimized. To keep processing smooth with extensive datasets, consider upgrading your hardware or working on a representative sample of the data.
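
RapidMiner has its own Sample operators for this. The Python sketch below is not RapidMiner code. It illustrates one standard sampling technique, reservoir sampling (Algorithm R), which draws a uniform random sample from a stream too large to hold in memory. `reservoir_sample` is a hypothetical helper name.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(items: Iterable[T], k: int, seed: Optional[int] = None) -> List[T]:
    """Return up to k items chosen uniformly at random from a stream of unknown length.

    Implements Algorithm R: memory use stays O(k) however long the stream is.
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    rng = random.Random(seed)
    reservoir: List[T] = []
    for i, item in enumerate(items):
        if i < k:
            reservoir.append(item)  # fill the reservoir with the first k items
        else:
            j = rng.randint(0, i)  # uniform over 0..i inclusive
            if j < k:
                reservoir[j] = item  # keep item i with probability k / (i + 1)
    return reservoir


sample = reservoir_sample(range(1_000_000), 5, seed=1)
print(sample)
```

Because the function accepts any iterable, you could pass an open file object to sample lines from a large CSV without loading the whole file.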

What Are the System Requirements to Run RapidMiner Smoothly?

RapidMiner runs best when your hardware is adequate and your software environment is compatible. The better these fit together, the fewer problems you'll encounter. Aim for a modern processor, ample RAM, and a supported operating system such as Windows or Linux.

Does RapidMiner Integrate With Other Data Analysis Tools or Languages?

Yes. RapidMiner connects to a variety of data analysis tools and platforms. It supports scripting in Python and R, which lets you extend its functionality and automate workflows. This flexibility helps you streamline data processes, combine multiple tools, and customize analyses. That makes RapidMiner a versatile choice for diverse data science projects.
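
As an example of Python interoperability, RapidMiner's Python Scripting extension provides an Execute Python operator. Its script defines an `rm_main` function:

- each connected input port arrives as a pandas DataFrame argument;
- the returned DataFrame(s) are passed to the output ports.

The sketch below assumes a single input port. The numeric columns `price` and `quantity` and the derived `revenue` attribute are hypothetical, so adjust them to your data.

```python
import pandas as pd


# rm_main is the entry point the Execute Python operator calls.
# One argument per connected input port; a single ExampleSet is assumed here.
def rm_main(data: pd.DataFrame) -> pd.DataFrame:
    result = data.copy()  # work on a copy so the input frame is not mutated
    # Hypothetical columns: derive a revenue attribute from price and quantity.
    result["revenue"] = result["price"] * result["quantity"]
    return result
```

To try the function outside RapidMiner, call it on a small DataFrame, for example `rm_main(pd.DataFrame({"price": [2.5, 4.0], "quantity": [4, 3]}))`.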

How Often Are RapidMiner Updates and New Features Released?

Update frequency varies. Major releases have typically arrived every few months, with smaller patches more often. The feature release schedule follows user needs, so new tools and improvements arrive regularly. Staying current lets you take advantage of the latest capabilities and analyze data more effectively.

Conclusion

By following this quick guide, you've taken the first steps toward getting real value from your data. The learning curve has a few twists, but each step brings you closer to insights that can make a difference. Every expert was once a beginner, and steady practice will gradually turn challenges into confidence. Keep exploring, stay curious, and your skills will grow.