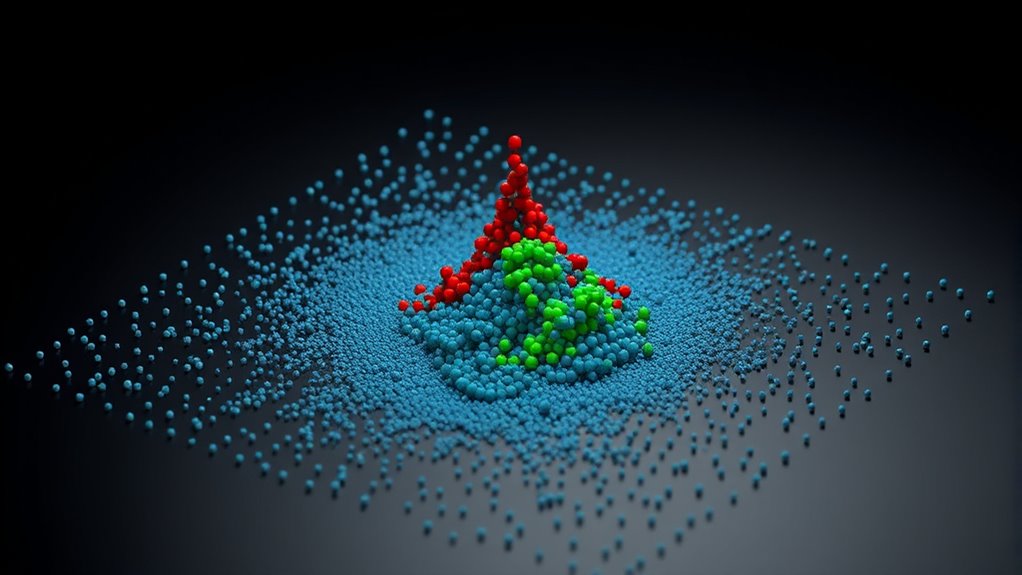

Outliers are data points that stand out sharply from the rest of your dataset, and spotting them matters because they can distort your analyses. They can skew measures like the mean, inflate variability, and hide true patterns, leading to misleading conclusions. You can detect them with visualization or statistical tests and handle them with strategies such as transformation or robust analysis. Understanding their impact helps you interpret your results more accurately.

Key Takeaways

- Outliers are data points that significantly deviate from the overall dataset, potentially skewing analysis results.

- Detecting outliers involves methods like Z-scores, boxplots, and robust statistical tests to identify unusual observations.

- Outliers can distort measures of central tendency and inflate variability, leading to misleading conclusions.

- Handling outliers through removal, transformation, or robust methods helps ensure accurate statistical modeling.

- Proper detection and management of outliers are essential for reliable analysis and meaningful interpretation of data.
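As a rough illustration of the detection rules named above, here is a minimal sketch of a Z-score check and the IQR (boxplot fence) check using only Python's standard library. The function names, the sample data, and the thresholds (3 standard deviations, 1.5 times the IQR) are illustrative assumptions, not fixed requirements.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Return values whose absolute z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample standard deviation
    return [v for v in values if abs(v - mean) / sd > threshold]

def iqr_outliers(values, k=1.5):
    """Return values outside the fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Made-up sample: nine ordinary readings and one extreme value.
data = [12, 13, 12, 14, 13, 15, 14, 13, 12, 95]

print("z-score rule flags:", zscore_outliers(data))
print("IQR rule flags:", iqr_outliers(data))
```

On this small sample the IQR rule flags 95 while the Z-score rule flags nothing, because the extreme value inflates the very mean and standard deviation the Z-score is built from. This is one reason the two rules are often used together rather than interchangeably.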

Outliers are data points that deviate markedly from the rest of your dataset, and they can have a profound effect on your statistical analysis. These exceptional values can distort measures like the mean, inflate variance, and obscure true patterns within your data. To handle this, you need to identify outliers accurately and decide how to address them effectively. One approach involves employing robust methods that are less sensitive to extreme values, ensuring your analysis reflects the core data trend without being skewed by outliers. Robust statistical techniques, such as median-based measures or robust regression, provide reliable estimates even when outliers are present, helping you maintain analytical integrity.
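To make the contrast between classical and median-based measures concrete, the following sketch compares the mean and standard deviation with the median and the median absolute deviation (MAD) on a made-up sample, before and after adding one extreme value. The helper `mad` and the data are assumptions for illustration only.

```python
import statistics

def mad(values):
    """Median absolute deviation: the median of |x - median(x)|."""
    med = statistics.median(values)
    return statistics.median([abs(v - med) for v in values])

clean = [12, 13, 12, 14, 13, 15, 14, 13, 12]
contaminated = clean + [95]  # same data plus one extreme value

for label, sample in (("clean", clean), ("contaminated", contaminated)):
    print(
        label,
        "mean:", round(statistics.fmean(sample), 2),
        "stdev:", round(statistics.stdev(sample), 2),
        "median:", statistics.median(sample),
        "MAD:", mad(sample),
    )
```

In this example the single extreme value moves the mean and standard deviation substantially, while the median and MAD stay where they were.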

Another essential strategy is data transformation, which can mitigate the influence of outliers by changing the data's scale or distribution. Logarithmic, square root, and Box-Cox transformations are common choices that compress large values far more than small ones, pulling a long right tail closer to the bulk of the data and making patterns easier to detect. Transforming your data can reveal relationships that were obscured by extreme values, leading to more accurate models and insights. Be cautious, though: the transformation has to suit your data and analysis goals. The logarithm and Box-Cox transformations require strictly positive values, and results must be interpreted on the transformed scale. Sometimes a simple transformation suffices, while other times more sophisticated techniques are necessary. Additionally, understanding the regional context of your data, such as divorce statistics or local demographic factors, can help you judge whether an outlier is a meaningful observation or an anomaly that needs adjustment.
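A minimal sketch of the logarithmic case, assuming strictly positive data: the ratio of the largest value to the median gives a crude sense of how far the extreme point sits from the bulk before and after the transformation. The income figures and the helper names are made up for illustration.

```python
import math
import statistics

def log_transform(values):
    """Apply log10 to each value; only defined for strictly positive data."""
    if any(v <= 0 for v in values):
        raise ValueError("log transform requires strictly positive values")
    return [math.log10(v) for v in values]

def max_to_median(values):
    """Crude measure of how far the largest value sits from the center."""
    return max(values) / statistics.median(values)

incomes = [20_000, 25_000, 30_000, 35_000, 40_000, 1_000_000]  # hypothetical
logged = log_transform(incomes)

print("raw max/median:", round(max_to_median(incomes), 2))
print("log max/median:", round(max_to_median(logged), 2))
```

On the raw scale the largest value is roughly thirty times the median, while on the log scale it is only modestly above it, so it no longer dominates summaries computed from the data.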

Frequently Asked Questions

How Do Outliers Affect Machine Learning Model Performance?

Outliers can degrade your machine learning model's accuracy and generalization. They may pull the model toward fitting a few extreme points or noise instead of the underlying pattern, leading to poor predictions. To mitigate this, use algorithms or loss functions that are less sensitive to extreme values, and scale features with care: min-max normalization is determined entirely by the extremes, so scaling based on the median and interquartile range is often the safer choice. Identifying and managing outliers helps your model stay accurate, reliable, and better at making predictions on unseen data.
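Feature scaling is one place where this shows up directly. The sketch below compares min-max scaling with a median/IQR-based scaling on a made-up feature containing one extreme value. Both functions are hand-written illustrations rather than any library's API, although scikit-learn's `RobustScaler` follows a similar median and IQR idea.

```python
import statistics

def min_max_scale(values):
    """Rescale to [0, 1] using the minimum and maximum."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def robust_scale(values):
    """Center on the median and divide by the interquartile range."""
    q1, med, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - med) / (q3 - q1) for v in values]

feature = [12, 13, 12, 14, 13, 15, 14, 13, 12, 95]  # last value is extreme

mm = min_max_scale(feature)
rb = robust_scale(feature)

# Spread of the nine ordinary values under each scaling.
print("min-max spread of bulk:", round(max(mm[:-1]) - min(mm[:-1]), 3))
print("robust spread of bulk:", round(max(rb[:-1]) - min(rb[:-1]), 3))
```

With min-max scaling the nine ordinary values are squeezed into a tiny slice of the [0, 1] range, whereas the median/IQR scaling keeps their differences visible and leaves the extreme value as a clearly large number.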

What Are the Best Software Tools for Outlier Detection?

You can rely on environments like R, Python, and MATLAB for outlier detection. All three support standard rules such as the IQR fences and Z-scores, and visualizations like box plots quickly reveal unusual points. Keep in mind that the Z-score is built from the mean and standard deviation, so it is not itself robust to extreme values. In Python, libraries such as scikit-learn and PyOD provide dedicated outlier detection algorithms. These tools help you spot outliers efficiently so your analysis stays accurate and reliable.

Can Outliers Indicate Data Collection Errors?

Yes, outliers can indicate data collection errors, such as data entry mistakes or measurement inaccuracies. When you spot outliers, review the underlying records carefully, checking for possible entry errors or faulty measurements. These anomalies often signal issues that need correction before analysis. Addressing them helps ensure your results are accurate and reliable, and it prevents conclusions skewed by incorrect data points.

How Should Outliers Be Handled in Time-Series Data?

Start by plotting the series to spot points or stretches that break from the surrounding pattern. Then consider robust techniques that reduce the influence of outliers, such as median-based smoothing or trimming. If an outlier results from a data error, correct or remove it. For genuine anomalies, decide whether to model them explicitly or treat them as special cases, so that they improve rather than degrade your analysis and forecasts.
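One median-based way to flag candidates in a series is to compare each point with the median of its neighbors, in the spirit of a Hampel filter. The sketch below is a simplified illustration: the function name, window size, threshold, and readings are all assumptions, and the 1.4826 factor is the usual constant that makes the MAD comparable to a standard deviation for normally distributed data.

```python
import statistics

def rolling_median_flags(series, window=5, threshold=3.0):
    """Flag points far from the median of their centered window.

    Distance is measured in units of 1.4826 * MAD of the window.
    Windows are truncated at the ends of the series.
    """
    half = window // 2
    flags = []
    for i, x in enumerate(series):
        neighborhood = series[max(0, i - half): i + half + 1]
        med = statistics.median(neighborhood)
        mad = statistics.median([abs(v - med) for v in neighborhood])
        scale = 1.4826 * mad
        # A zero scale (constant window) gives no basis for flagging.
        flags.append(scale > 0 and abs(x - med) > threshold * scale)
    return flags

# Hypothetical sensor readings with one spike.
readings = [20.1, 20.3, 20.2, 20.4, 35.0, 20.5, 20.6, 20.4, 20.7, 20.8]
flags = rolling_median_flags(readings)
flagged_positions = [i for i, f in enumerate(flags) if f]
print("flagged positions:", flagged_positions)
```

Because the comparison is local, a slow trend in the series does not trigger flags the way a global threshold would. Whether a flagged point is then corrected, removed, or modeled still depends on what caused it.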

Are Outliers Always Harmful to Statistical Analysis?

Outliers aren't always harmful to your statistical analysis; sometimes they reveal important insights or rare events. To limit their negative effects, you can use robust statistical methods that are less sensitive to extreme values. Techniques like winsorizing, which caps extreme values at a chosen cutoff instead of deleting them, or transformation help manage outliers while keeping every observation in the dataset. Applied with care, these strategies keep your analysis accurate without dismissing the potentially valuable information outliers might hold.
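As a minimal sketch of winsorizing in its classic form, the function below replaces the `k` smallest and `k` largest values with their nearest retained neighbors. The function name, the choice of `k`, and the data are illustrative assumptions; libraries such as SciPy offer their own percentile-based variants.

```python
import statistics

def winsorize(values, k=1):
    """Clamp the k smallest and k largest values to their nearest
    retained neighbors, keeping the sample size unchanged."""
    if k < 0 or 2 * k >= len(values):
        raise ValueError("k must satisfy 0 <= 2*k < len(values)")
    ordered = sorted(values)
    low, high = ordered[k], ordered[-k - 1]
    return [min(max(v, low), high) for v in values]

data = [12, 13, 12, 14, 13, 15, 14, 13, 12, 95]
capped = winsorize(data, k=1)

print("capped data:", capped)
print("mean before:", statistics.fmean(data))
print("mean after:", statistics.fmean(capped))
```

Here the value 95 is pulled down to 15, the largest remaining value, so every observation stays in the dataset while its pull on the mean shrinks considerably.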

Conclusion

Just as Sherlock Holmes uncovers hidden clues, detecting outliers reveals deeper truths in your data. Ignoring these anomalies can distort your analysis, leading you astray like a ship lost at sea. By actively identifying and understanding outliers, you keep your conclusions as sharp as a detective's intuition. Remember, every outlier is a clue: investigate it, and your statistical journey becomes clearer, guiding you toward more accurate, meaningful insights.