The Central Limit Theorem shows that, for independent observations from a population with finite variance, the averages of large samples tend to follow a normal (bell-shaped) curve, whatever the shape of the original data. This means that with bigger samples, you can analyze sample averages using standard normal-based methods. It helps you estimate population values and quantify how uncertain those estimates are, which makes handling real-world data simpler and more reliable.

Key Takeaways

- The Central Limit Theorem states that the distribution of sample means becomes approximately normal as sample size increases.

- Regardless of the shape of the original data distribution, large enough samples of independent observations produce a nearly normal sampling distribution, provided the population variance is finite.

- It allows us to use normal distribution properties to estimate probabilities and confidence intervals for sample means.

- Larger sample sizes improve the accuracy of the normal approximation; 30 or more is a common rule of thumb, though strongly skewed data may need more.

- This theorem simplifies complex data analysis by enabling normal-based inference on various datasets.

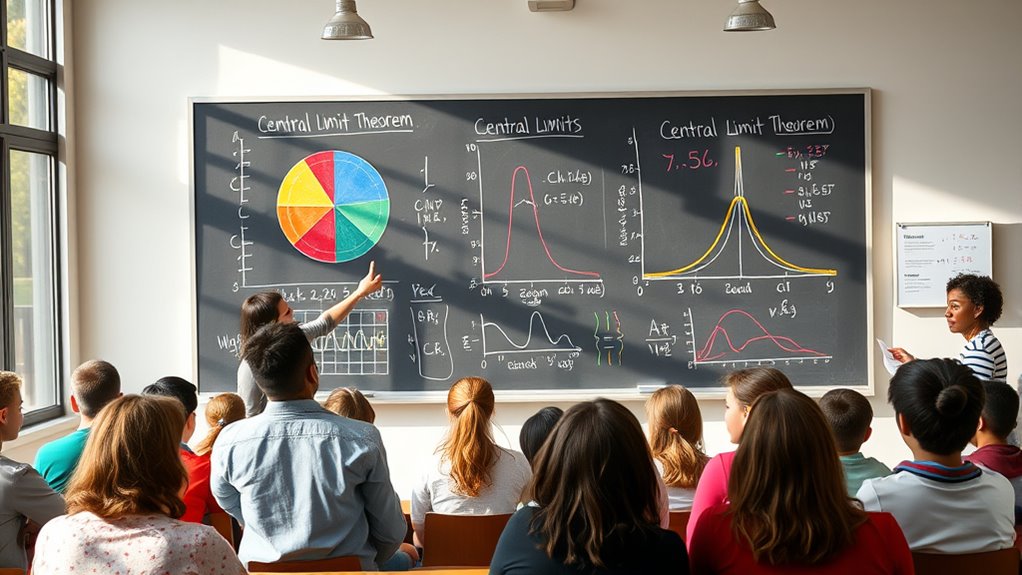

The Central Limit Theorem is a fundamental concept in statistics that explains why the distribution of sample means tends to be normal, regardless of the original data’s distribution. When you collect multiple samples of the same size from a population, each sample produces a mean. The collection of these means forms a sampling distribution, which reveals how the averages behave across different samples. However skewed or irregular the original data might be, the sampling distribution of the sample means becomes increasingly bell-shaped as the sample size grows, as long as the observations are independent and the population variance is finite. This allows you to apply the normal approximation to sample means even if the raw data isn’t normally distributed.

Understanding the sampling distribution is key here. It is the distribution of all possible sample means you could get from the population for samples of a given size. The Central Limit Theorem tells you that, with a sufficiently large sample size, this distribution will be approximately normal. The approximation becomes more accurate as the sample size increases. A sample size of 30 or more is a common rule of thumb: smaller samples can work if the original data are close to symmetric, and strongly skewed data may need considerably more. This normal approximation makes it easier to calculate probabilities or confidence intervals because you can rely on well-known properties of the normal distribution.
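To make the idea of a sampling distribution concrete, here is a minimal simulation sketch. It assumes an Exponential(1) population (a strongly right-skewed choice made purely for illustration), and the function names `sample_means` and `skewness` are hypothetical helpers, not part of any library. For each sample size it draws many samples, records each sample mean, and reports how skewed the resulting collection of means is.

```python
import random
import statistics

def sample_means(n, num_samples=5000, seed=0):
    """Draw num_samples samples of size n from an Exponential(1) population
    (an illustrative assumption) and return the mean of each sample."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        for _ in range(num_samples)
    ]

def skewness(values):
    """Sample skewness: near 0 for a symmetric shape, positive for a long right tail."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return statistics.fmean(((v - m) / s) ** 3 for v in values)

for n in (1, 5, 30, 100):
    means = sample_means(n)
    print(f"n={n:>3}  mean of means={statistics.fmean(means):.3f}  "
          f"sd of means={statistics.stdev(means):.3f}  "
          f"skewness={skewness(means):.2f}")
```

For this particular population the theoretical skewness of the sample mean is 2/√n, so the printed skewness should shrink toward 0 as n grows, while the spread of the means shrinks roughly like 1/√n.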

The sampling distribution of sample means approaches normality as sample size increases.

When you use the normal approximation, you’re simplifying the process of inference. Instead of dealing with complex, unknown distributions, you can leverage the familiar properties of the normal distribution. For example, if you want to determine how likely it is to observe a sample mean within a certain range, you can standardize your value using the mean and standard deviation of the sampling distribution. The mean of the sampling distribution equals the population mean μ, and its standard deviation (the standard error) is σ/√n, where σ is the population standard deviation and n is the sample size. Standardizing gives a z-score, z = (x̄ − μ) / (σ/√n), which you can then compare to the standard normal distribution. Because the sampling distribution is approximately normal, this process yields good probability estimates when the sample is large enough.
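The standardization step can be sketched in a few lines. The numbers below (population mean 50, standard deviation 12, sample size 36) are invented for illustration, and `prob_mean_between` is a hypothetical helper name; the only assumption beyond that is that the sample is large enough for the normal approximation to be reasonable.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def prob_mean_between(lo, hi, mu, sigma, n):
    """Approximate P(lo <= sample mean <= hi) using the normal approximation."""
    se = sigma / math.sqrt(n)   # standard error of the sample mean
    z_lo = (lo - mu) / se       # z-score of the lower bound
    z_hi = (hi - mu) / se       # z-score of the upper bound
    return normal_cdf(z_hi) - normal_cdf(z_lo)

# Hypothetical inputs: population mean 50, sd 12, samples of size 36.
p = prob_mean_between(47, 53, mu=50, sigma=12, n=36)
print(f"P(47 <= sample mean <= 53) is approximately {p:.4f}")
```

With these made-up inputs the standard error is 12/√36 = 2, so the bounds sit 1.5 standard errors on either side of the mean and the probability comes out at about 0.866.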

In practical terms, the Central Limit Theorem empowers you to work with sample means confidently, knowing that they tend to follow a predictable, bell-shaped pattern. Whether you’re estimating population parameters, testing hypotheses, or constructing confidence intervals, the normal approximation offers a solid foundation. It’s the reason why many statistical methods rely on the assumption of normality, even when the raw data isn’t normal. As you gather larger samples, you’ll notice this pattern more clearly, and your ability to make accurate inferences improves. The beauty of the Central Limit Theorem is that it bridges the gap between real-world, often messy data and the neat, mathematical world of normal distributions.
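As one illustration of the confidence-interval use case, the sketch below builds a normal-approximation interval for a mean and then checks by simulation how often it captures the true value. The Exponential(1) population, the sample sizes, and the helper names `mean_confidence_interval` and `coverage` are all assumptions chosen for the example; the interval uses the sample standard deviation in place of the unknown population value.

```python
import math
import random
import statistics

def mean_confidence_interval(sample, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    mean = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(n)   # estimated standard error
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return mean - z * se, mean + z * se

def coverage(n, trials=2000, seed=3):
    """Fraction of intervals that contain the true mean (1.0) of an
    Exponential(1) population, a skewed and non-normal choice."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.expovariate(1.0) for _ in range(n)]
        lo, hi = mean_confidence_interval(sample)
        hits += lo <= 1.0 <= hi
    return hits / trials

for n in (5, 30, 200):
    print(f"n={n:>3}  coverage of nominal 95% interval: {coverage(n):.3f}")
```

If the normal approximation is doing its job, the reported coverage should move closer to the nominal 95% as n grows, and fall noticeably short of it for very small samples from a skewed population.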

Frequently Asked Questions

How Does Sample Size Affect the CLT’s Accuracy?

You might wonder how sample size impacts the CLT’s accuracy. As your sample size increases, the distribution of the sample mean gets closer to a normal distribution, so the normal approximation becomes more precise. Larger samples also reduce the variability of the sample mean, because its standard error shrinks in proportion to 1/√n. With small samples the approximation can be poor, especially when the population is skewed or heavy-tailed, and probabilities computed from the normal curve may be noticeably off. In short, bigger sample sizes make the normal approximation more trustworthy.
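One way to put a number on "how close to normal" is to measure the largest gap between the empirical distribution of standardized sample means and the standard normal curve. The sketch below does that under an assumed Exponential(1) population; `max_cdf_gap` is a hypothetical helper, and the result mixes the true approximation error with simulation noise, so treat it as a rough indicator rather than an exact figure.

```python
import math
import random
import statistics

def max_cdf_gap(n, num_samples=4000, seed=1):
    """Largest gap between the empirical CDF of standardized sample means
    (Exponential(1) population, an assumption) and the standard normal CDF."""
    rng = random.Random(seed)
    normal = statistics.NormalDist()
    # Exponential(1) has mean 1 and sd 1, so the standard error is 1/sqrt(n).
    z = sorted(
        (statistics.fmean(rng.expovariate(1.0) for _ in range(n)) - 1.0) * math.sqrt(n)
        for _ in range(num_samples)
    )
    gap = 0.0
    for i, value in enumerate(z):
        cdf = normal.cdf(value)
        # Compare with the empirical CDF just before and just after this point.
        gap = max(gap, abs(cdf - i / num_samples), abs(cdf - (i + 1) / num_samples))
    return gap

for n in (1, 5, 30, 100):
    print(f"n={n:>3}  max gap from normal CDF: {max_cdf_gap(n):.3f}")
```

The gap should be large for n = 1, where the "mean" is just a single skewed observation, and should fall as n increases.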

Can CLT Be Applied to Non-Numeric Data?

You might wonder if the CLT applies to non-numeric data like categorical variables. The CLT concerns sums and averages of numeric values, so it doesn’t apply to category labels directly. It does apply once you code a category as an indicator (1 if an observation falls in the category, 0 otherwise): a sample proportion is the mean of those indicators, so for large samples it is approximately normal. That is the basis of normal-approximation intervals and tests for proportions. For questions about the association between categorical variables, methods like chi-square tests are the usual choice.
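A sample proportion can be treated as the mean of 0/1 indicator values, which is how averages enter the analysis of categorical data. The following sketch simulates that under assumed values (a category share of 0.3 and samples of size 100, both invented for the example; `sample_proportions` is a hypothetical helper) and compares the spread of the simulated proportions with the usual standard-error formula √(p(1 − p)/n).

```python
import math
import random
import statistics

def sample_proportions(p, n, num_samples=5000, seed=2):
    """Simulate num_samples sample proportions, each from n independent
    observations that fall in the category with probability p."""
    rng = random.Random(seed)
    return [
        sum(rng.random() < p for _ in range(n)) / n   # mean of 0/1 indicators
        for _ in range(num_samples)
    ]

p, n = 0.3, 100   # hypothetical category share and sample size
props = sample_proportions(p, n)
theoretical_se = math.sqrt(p * (1 - p) / n)

print(f"mean of sample proportions: {statistics.fmean(props):.4f}")
print(f"sd of sample proportions:   {statistics.stdev(props):.4f}")
print(f"theoretical standard error: {theoretical_se:.4f}")
```

The simulated mean should sit near p and the simulated standard deviation near the theoretical standard error, which is what the normal approximation for proportions relies on.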

What Are Common Misconceptions About the CLT?

You might think the CLT is foolproof, but common misconceptions include believing that it makes the raw data normal (it describes sample means, not individual observations), that a fixed sample size such as 30 is always enough, or that the shape of the original distribution doesn’t matter. In reality, the CLT requires independent observations from a population with finite variance, and the approximation is only as good as the sample size allows; small samples from skewed distributions can lead to misleading results. Understanding the distribution shape helps you judge how well the CLT works in your situation.

How Does the CLT Relate to Real-World Data Analysis?

The CLT is what lets you attach a margin of error to an average computed from real data. Sample means vary from sample to sample, but the CLT tells you that this variation is approximately normal, centered on the true population mean, with a spread that shrinks as the sample grows. That is why you can build confidence intervals and run hypothesis tests on survey results, measurements, or experiment outcomes without knowing the exact distribution of the raw data. With small samples the approximation is weaker, so the conclusions deserve more caution.

Are There Exceptions Where the CLT Doesn’t Hold True?

Yes. The classical CLT assumes independent, identically distributed observations with finite variance, and it can fail when those assumptions are violated. If your data points are strongly correlated, or the tails are so heavy that the variance is infinite (the Cauchy distribution is the standard example), the sample means may not follow a normal distribution however large the sample. In such situations, you need alternative methods or generalized versions of the CLT, such as those developed for dependent data, to analyze your data accurately.
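The heavy-tail failure is easy to see in a simulation. The sketch below compares sample means from a standard normal population with sample means from a standard Cauchy population, which has no finite mean or variance. The choice of populations, the sample sizes, and the helper names (`iqr_of_means`, `draw_normal`, `draw_cauchy`) are assumptions for illustration; the interquartile range is used as the measure of spread because the standard deviation is not meaningful for Cauchy data.

```python
import math
import random
import statistics

def iqr_of_means(draw, n, num_samples=2000, seed=4):
    """Interquartile range of num_samples sample means, each from n draws."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(draw(rng) for _ in range(n))
        for _ in range(num_samples)
    ]
    q1, _, q3 = statistics.quantiles(means, n=4)
    return q3 - q1

def draw_normal(rng):
    return rng.gauss(0.0, 1.0)

def draw_cauchy(rng):
    # Inverse-CDF sampling for a standard Cauchy variable.
    return math.tan(math.pi * (rng.random() - 0.5))

for n in (1, 20, 200):
    print(f"n={n:>3}  normal IQR={iqr_of_means(draw_normal, n):.3f}  "
          f"Cauchy IQR={iqr_of_means(draw_cauchy, n):.3f}")
```

For the normal population the spread of the means should shrink as n grows. For the Cauchy population it should stay roughly the same, because the mean of n standard Cauchy draws is itself standard Cauchy; averaging more data does not help.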

Conclusion

Now you see how the Central Limit Theorem simplifies understanding data. It shows that sample averages tend to follow a normal pattern whatever the shape of the original distribution, provided the observations are independent, the variance is finite, and the sample is large enough. That turns the randomness of sampling into something predictable: you can quantify variability, estimate population values, and make informed decisions using normal-based methods. With this theorem, you can approach data confidently, while checking that its conditions hold in your situation.