Parametric and nonparametric methods differ mainly in their assumptions about data. Parametric techniques assume your data follows a specific distribution, such as the normal distribution, and are efficient when those assumptions are met. Nonparametric methods don’t rely on such assumptions and are more flexible, which makes them better suited to skewed or messy data. Read on to learn when to choose each approach and how the choice affects your analysis.

Key Takeaways

- Parametric methods rely on specific distribution assumptions, while nonparametric methods do not depend on such assumptions.

- Parametric models are fixed in form and more efficient if assumptions hold; nonparametric methods are more flexible and adaptable.

- Nonparametric techniques are robust to outliers and skewed data, whereas parametric methods may be sensitive to assumption violations.

- Parametric tests like t-tests and ANOVA are generally more powerful when their assumptions hold; nonparametric tests may need somewhat larger samples to match that power.

- The choice depends on data characteristics and research goals, balancing assumptions, flexibility, and robustness.

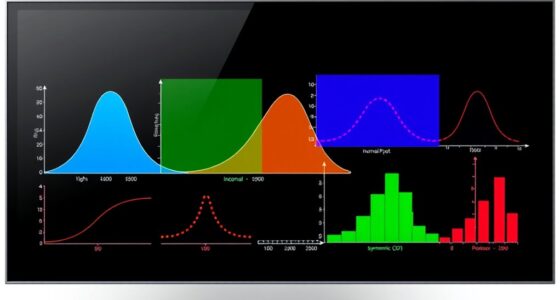

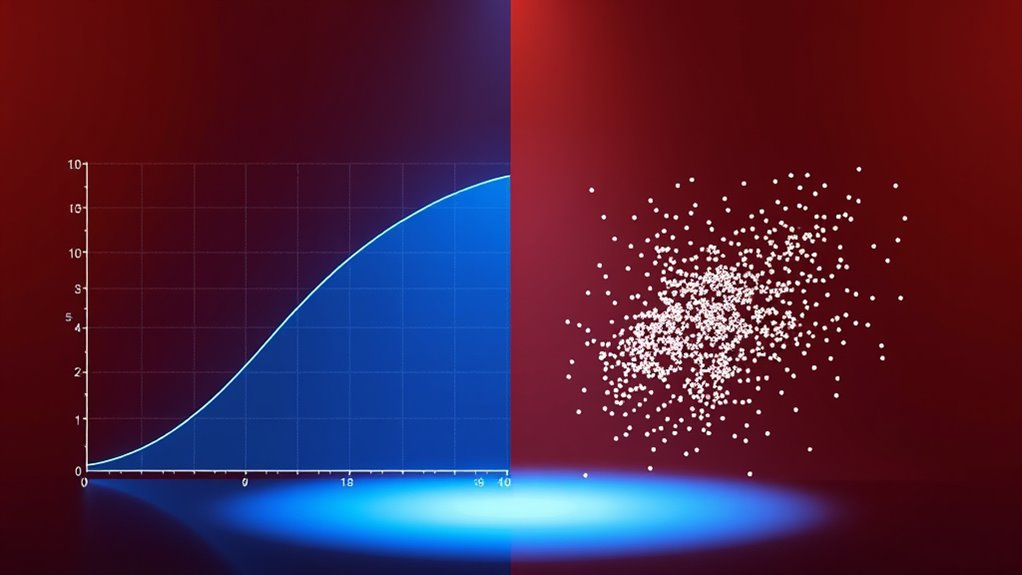

Have you ever wondered how statisticians decide which methods to use for analyzing data? The choice often hinges on the differences between parametric and nonparametric approaches, and at the core of that decision are distribution assumptions and model flexibility.

Parametric methods require you to assume a specific distribution for your data, such as normal or binomial, described by a fixed number of parameters (a normal distribution, for instance, is fully specified by its mean and variance). These assumptions simplify analysis because they let you use well-established formulas and models, but they also mean your results depend heavily on whether your data truly fits those assumptions. If your data deviates from the assumed distribution, your conclusions might be misleading.

That’s where model flexibility becomes vital. Parametric models are generally less flexible because they rely on fixed forms: they excel when your data aligns with the assumptions but struggle otherwise. For example, if you’re analyzing data that appears normally distributed, parametric tests like t-tests or ANOVA are efficient and powerful. But if your data is strongly skewed or has outliers, especially in small samples, these methods can produce misleading p-values and confidence intervals, prompting you to consider alternatives. Understanding the underlying distribution of your data is therefore the first step in choosing an analysis method.

Nonparametric methods, on the other hand, don’t require strict distribution assumptions. They’re designed to adapt to a wide range of data types and shapes, which makes them particularly valuable when you’re unsure about the underlying distribution or when your data violates the assumptions of parametric tests. Nonparametric techniques like the Mann-Whitney U test or the Kruskal-Wallis test work with the ranks (orderings) of the data rather than with distribution parameters such as the mean and variance. Because an extreme value simply receives the highest or lowest rank, no matter how far out it lies, these methods tend to be more robust against outliers and skewed data.
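
To see why ranks resist outliers, the sketch below computes two test statistics by hand in pure Python: Welch’s t statistic (a variant of the t-test that does not assume equal variances) and the Mann-Whitney U statistic, counted as the number of (control, treated) pairs in which the treated value is larger. The data and helper names are hypothetical, and the code shows only the statistics, not p-values.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's two-sample t statistic for mean(b) - mean(a) (unequal variances)."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(b) - mean(a)) / se

def mann_whitney_u(a, b):
    """U statistic for sample b: pairs (x in a, y in b) with y > x; ties count 1/2.

    Counting pairs this way is equivalent to the rank-sum formula,
    so only the ordering of the values matters, not their magnitude.
    """
    u = 0.0
    for x in a:
        for y in b:
            if y > x:
                u += 1.0
            elif y == x:
                u += 0.5
    return u

# Hypothetical measurements for two groups.
control = [4.1, 4.8, 5.0, 5.3, 5.9]
treated = [5.6, 6.0, 6.4, 6.9, 7.2]
# Same data, but the largest treated value is a wild outlier (e.g., a typo).
treated_outlier = [5.6, 6.0, 6.4, 6.9, 72.0]

print(f"t = {welch_t(control, treated):.2f}, U = {mann_whitney_u(control, treated)}")
# t = 3.38, U = 24.0
print(f"t = {welch_t(control, treated_outlier):.2f}, U = {mann_whitney_u(control, treated_outlier)}")
# t = 1.09, U = 24.0
```

Replacing 7.2 with 72.0 inflates the treated group’s variance, so the t statistic drops from about 3.4 to about 1.1 even though the difference in means grew; U stays at 24 because 72.0 still holds the same rank.
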

However, this increased flexibility often comes at a cost: when the assumptions of a parametric test are met, a nonparametric alternative is usually somewhat less powerful. That means you might need a larger sample to detect the same effect or difference, although for common rank tests the penalty is often modest. Choosing between the two approaches involves weighing the importance of distribution assumptions against the need for model flexibility. If your data meets the necessary assumptions, parametric methods are usually preferable for their efficiency and precision. But if your data is messy, doesn’t fit a standard distribution, or you want a method that adapts to various data shapes, nonparametric techniques are the safer choice. Ultimately, the decision hinges on your specific data set and research question, but a clear grasp of distribution assumptions and model flexibility will guide you toward the most appropriate analysis method.

Frequently Asked Questions

How Do I Choose Between Parametric and Nonparametric Methods?

Choose based on your data’s distribution and how much flexibility you need. If your data plausibly fits a specific distribution and you want the most precise estimates, go parametric. If your data is complex, skewed, or clearly violates the assumptions, nonparametric methods handle a wider range of data shapes without strict distribution requirements. Consider your sample size and the nature of your data (for example, whether it is ordinal or contains outliers) to make the best choice.

What Are Common Examples of Parametric Tests?

Think of common parametric tests as the Swiss Army knives of statistics. You might use a t-test to compare two means, ANOVA to compare three or more group means, or Pearson’s correlation to measure linear relationships. These tests assume approximately normally distributed data, and the classic t-test and one-way ANOVA also assume roughly equal variances across groups. They’re powerful when their conditions are met, making your analysis precise and efficient.
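
Pearson’s correlation also has a widely used rank-based counterpart, Spearman’s rank correlation, which is simply Pearson’s r computed on the ranks of the data (Spearman’s is not mentioned above and is added here for illustration). The pure-Python sketch below, with hypothetical helper names, shows the two side by side on data that rise monotonically but not linearly.

```python
import math

def pearson(x, y):
    """Pearson's r: strength of the *linear* relationship between x and y."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho: Pearson's r applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

x = [1, 2, 3, 4, 5, 6]
y = [v ** 3 for v in x]  # monotone but strongly non-linear
print(round(pearson(x, y), 3), spearman(x, y))  # 0.938 1.0
```

Because y always increases with x, Spearman’s coefficient is exactly 1, while Pearson’s r falls below 1 because the relationship is curved rather than linear.
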

When Is a Nonparametric Approach More Appropriate?

Choose a nonparametric approach when robustness matters more than maximum efficiency, especially if your data doesn’t meet strict distribution assumptions. Nonparametric tests are often more appropriate when your sample is too small to check normality convincingly, when your data is skewed or ordinal, or when you have outliers, because they don’t rely on normality. This makes them more dependable under uncertain distribution conditions and helps keep your analysis valid when parametric assumptions are violated, although they still assume independent observations.

How Do Sample Size Requirements Differ?

Sample size requirements depend on whether the parametric assumptions actually hold. When they do, a parametric test extracts more information from each observation, so a nonparametric test typically needs a somewhat larger sample to reach the same power; for the Mann-Whitney U test versus the t-test on normal data, the penalty is only about 5%. When the assumptions fail, especially with heavy-tailed data or outliers, the situation can reverse: a rank-based test may reach the same power with fewer observations than a t-test. Parametric tests are therefore most efficient with small samples only when you have good reason to believe their assumptions.
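
To put a rough number on the efficiency trade-off, the snippet below uses the asymptotic relative efficiency (ARE) of the Mann-Whitney (Wilcoxon rank-sum) test relative to the t-test: 3/π ≈ 0.955 when the data really are normal, and never below 0.864 for location-shift alternatives with continuous data (a classic result due to Hodges and Lehmann). Dividing a t-test sample size by the ARE gives an approximate rank-test sample size. This is a large-sample rule of thumb, not a power analysis; the helper `rank_test_sample_size` and the starting value of 64 per group are hypothetical.

```python
import math

# ARE of the Mann-Whitney/Wilcoxon rank-sum test relative to the t-test.
ARE_NORMAL = 3 / math.pi   # data truly normal (about 0.955)
ARE_WORST_CASE = 0.864     # lower bound for continuous location-shift models

def rank_test_sample_size(n_t_test, are):
    """Approximate per-group n for the rank test to match a t-test using n_t_test.

    Hypothetical helper: a large-sample approximation, n_rank ~ n_t / ARE.
    """
    return math.ceil(n_t_test / are)

n_t = 64  # hypothetical per-group size from a t-test power calculation
print(rank_test_sample_size(n_t, ARE_NORMAL))      # 68
print(rank_test_sample_size(n_t, ARE_WORST_CASE))  # 75
```

For heavy-tailed data the ARE can exceed 1, in which case the same formula returns a smaller n than the t-test would need.
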

Can I Combine Parametric and Nonparametric Techniques?

Yes, you can combine parametric and nonparametric techniques to balance structure and robustness. Semiparametric models do this inside a single model: the Cox proportional hazards model, for instance, specifies covariate effects parametrically but leaves the baseline hazard unspecified. At the workflow level, you might apply a parametric model where its assumptions hold, switch to a nonparametric method where they don’t, or run both and check whether they agree. This lets you adapt to messy data while keeping results interpretable and reducing the risk of misleading conclusions.
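
One common way to mix the two ideas is a permutation test: it keeps a parametric-style statistic, the difference in means, but derives the p-value by relabeling the pooled observations rather than assuming normality. The sketch below enumerates every relabeling exactly, which is only feasible for small samples; for larger ones, practitioners typically sample relabelings at random. The function name is hypothetical.

```python
from itertools import combinations
from statistics import mean

def permutation_test(a, b):
    """Exact two-sided permutation p-value for the difference in means.

    The statistic is the same one a t-test uses, but its null distribution
    comes from relabeling the pooled data instead of a normal-theory formula.
    """
    pooled = list(a) + list(b)
    observed = abs(mean(b) - mean(a))
    extreme = total = 0
    # Enumerate every way to assign len(a) of the pooled values to group "a".
    for idx in combinations(range(len(pooled)), len(a)):
        chosen = set(idx)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = abs(mean(group_b) - mean(group_a))
        # Small tolerance so relabelings that tie the observed value count as extreme.
        if diff >= observed - 1e-9:
            extreme += 1
        total += 1
    return extreme / total

print(permutation_test([1, 2, 3], [10, 11, 12]))  # 0.1 (2 of 20 relabelings)
```

With three observations per group there are only 20 relabelings, so the smallest achievable two-sided p-value is 2/20 = 0.1, a concrete reminder of how little evidence very small samples can carry.
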

Conclusion

In sum, choosing between parametric and nonparametric methods depends on your data and your research goals. Parametric methods provide precision and efficiency when their assumptions hold, while nonparametric methods offer flexibility and robustness when they don’t. In bias-variance terms, a parametric model has lower variance but risks bias if its assumed form is wrong; a nonparametric method reduces that risk at the cost of higher variance. Check your data’s distribution, weigh efficiency against robustness, and choose the method whose assumptions you can defend. Dive into your data with confidence, and let your discoveries drive your decisions!