Support Vector Machines (SVMs) classify data by finding the boundary that best separates different groups. They maximize the margin, which is the distance between the boundary and the closest data points from each group. A wider margin tends to make the model more robust on new data. If your data isn’t perfectly separable, SVMs handle that too, using slack variables to tolerate some errors and kernels to capture nonlinear patterns. Keep reading to see how these pieces fit together.

Key Takeaways

- SVMs find the best boundary to separate different groups of data by maximizing the distance from the closest points.

- The points closest to the boundary, called support vectors, determine where it sits, and a wider margin around them generally improves performance on unseen data.

- When data isn’t cleanly separable, SVMs use slack variables to allow some misclassifications while still keeping the margin as wide as possible.

- The kernel trick lets SVMs work as if the data had been mapped into a higher-dimensional space, where a linear boundary (a hyperplane) can separate it.

- With a suitable kernel and well-tuned parameters, SVMs handle both simple and complex classification tasks.

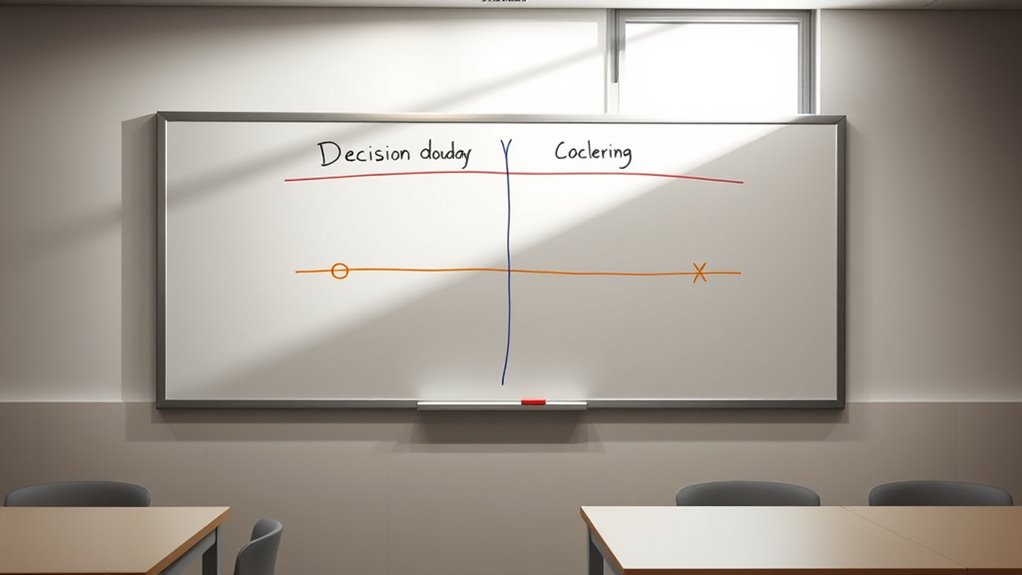

Support Vector Machines (SVMs) are a powerful tool for sorting data points into groups. At their core, SVMs separate the groups by drawing a boundary called a hyperplane. The key idea is to maximize the margin, that is, the distance between the hyperplane and the closest data points from each group. Those closest points are the support vectors, and they determine where the boundary sits. Keeping the boundary as far as possible from them helps the model stay accurate and robust when making predictions on new data.

The margin optimization process is central to how SVMs work. Think of it like drawing a line in the sand that’s as far away as possible from the nearest data points of each class. The larger the margin, the better the SVM tends to generalize to unseen data. During training, the algorithm adjusts the position and orientation of the hyperplane to maximize this margin by solving a mathematical optimization problem. When data is linearly separable, this approach works straightforwardly. But in real-world scenarios, data often isn’t perfectly separable, so SVMs introduce slack variables that allow some points to fall inside the margin or on the wrong side of the boundary. A regularization parameter, usually written C, then balances fitting the training data closely against keeping the margin wide enough to handle future uncertainties.
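To make the margin and the slack variables concrete, here is a minimal sketch in plain Python. The toy points, the two candidate boundaries, and the helper names (`geometric_margin`, `soft_margin_objective`) are made up for illustration, and the penalty weight is called `C` by common convention. The code only evaluates a given boundary; it does not solve the optimization problem a real SVM trainer solves.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def geometric_margin(w, b, points, labels):
    """Smallest distance from any point to the hyperplane w.x + b = 0.

    The value is negative if some point lies on the wrong side.
    """
    norm_w = math.sqrt(dot(w, w))
    return min(y * (dot(w, x) + b) / norm_w for x, y in zip(points, labels))

def soft_margin_objective(w, b, points, labels, C):
    """Return (0.5 * ||w||^2 + C * sum(slacks), slacks).

    slack_i = max(0, 1 - y_i * (w.x_i + b)) is zero for points that are
    on the correct side and outside the margin.
    """
    slacks = [max(0.0, 1.0 - y * (dot(w, x) + b)) for x, y in zip(points, labels)]
    return 0.5 * dot(w, w) + C * sum(slacks), slacks

# Toy data (hypothetical): labels are +1 or -1.
points = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
labels = [1, 1, -1, -1]

margin_a = geometric_margin((1.0, 1.0), -2.0, points, labels)  # boundary x1 + x2 = 2
margin_b = geometric_margin((1.0, 0.0), -1.0, points, labels)  # boundary x1 = 1
print(f"margin A = {margin_a:.3f}, margin B = {margin_b:.3f}")

# Boundary A rescaled so the closest points satisfy y * (w.x + b) = 1.
w, b = (0.5, 0.5), -1.0
clean_cost, clean_slacks = soft_margin_objective(w, b, points, labels, C=1.0)

# Add one hypothetical noisy point on the wrong side of the boundary.
noisy_cost, noisy_slacks = soft_margin_objective(
    w, b, points + [(1.5, 1.5)], labels + [-1], C=1.0
)
print(f"cost without noise = {clean_cost}, with noise = {noisy_cost}")
```

Both boundaries separate the toy data, but boundary A leaves a margin of about 1.414 against 1.0 for boundary B, so a margin-maximizing SVM would prefer A. The noisy point gets a nonzero slack, and a larger `C` would make that violation more expensive.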

Now, what if your data isn’t linearly separable? This is where the kernel trick comes into play. It lets the SVM behave as if your data had been transformed into a higher-dimensional space where a linear separation becomes possible. Instead of explicitly mapping every point, a kernel function computes the inner product two points would have in the transformed space, using only their original coordinates, which keeps the process computationally efficient. The linear boundary found in that space corresponds to a nonlinear decision boundary in the original space, so the SVM can separate complex data sets effectively. Popular kernels include polynomial, radial basis function (RBF), and sigmoid, each suited for different types of data patterns. Tuning the regularization parameter and the kernel’s own parameters also has a large effect on the results.
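A small worked example can show why no explicit mapping is needed. The sketch below uses a degree-2 polynomial kernel on 2-D inputs, where the matching feature map is short enough to write out by hand. The function names `phi` and `poly2_kernel` and the two sample points are assumptions chosen for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (x1, x2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: (x . z)^2."""
    return dot(x, z) ** 2

x = (1.0, 2.0)
z = (3.0, 4.0)

explicit = dot(phi(x), phi(z))  # map both points to 3-D, then take the inner product
implicit = poly2_kernel(x, z)   # stay in 2-D and never build phi(x)

print(explicit, implicit)
```

Both routes give the same number up to floating-point rounding, but the kernel never constructs the 3-D vectors. For kernels such as RBF, the implied feature space is infinite-dimensional, so the shortcut is the only practical option.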

In essence, SVMs use margin optimization to create a robust boundary, and kernels extend their power to handle complex, nonlinear data. Together, these techniques let SVMs classify a wide variety of data sets well. Whether your data is simple or intricate, understanding these core concepts helps you see why SVMs are such a versatile machine learning tool for real-world classification problems.

Frequently Asked Questions

How Do SVMs Handle Multi-Class Classification Problems?

You handle multi-class classification with SVMs by using strategies like one-vs-one or one-vs-all (also called one-vs-rest), which break the problem into multiple binary tasks. For k classes, one-vs-one trains a classifier for every pair of classes, k(k-1)/2 in total, while one-vs-all trains k classifiers, each separating one class from all the others. During hyperparameter tuning, you optimize parameters like the regularization term to improve accuracy. Keep in mind that combining many binary SVMs can make the model harder to interpret than simpler models, so weigh that against any gain in accuracy.
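The two strategies can be compared with a short sketch, assuming scikit-learn is installed. The synthetic four-class data set, its size, and the random seed are arbitrary choices for illustration.

```python
from sklearn.datasets import make_blobs
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

# Synthetic 4-class data; the sizes and seed are arbitrary.
X, y = make_blobs(n_samples=200, centers=4, random_state=0)
n_classes = len(set(y))

# One-vs-one: SVC trains one binary classifier per pair of classes internally.
ovo = SVC(kernel="linear", C=1.0, decision_function_shape="ovo").fit(X, y)
n_ovo = ovo.decision_function(X).shape[1]  # one column per class pair

# One-vs-rest: one binary classifier per class, each against all other classes.
ovr = OneVsRestClassifier(SVC(kernel="linear", C=1.0)).fit(X, y)
n_ovr = len(ovr.estimators_)

print(f"classes={n_classes}, one-vs-one models={n_ovo}, one-vs-rest models={n_ovr}")
```

With four classes, one-vs-one needs six binary models and one-vs-rest needs four. The gap widens quickly as the number of classes grows, although each one-vs-one model trains on a smaller subset of the data.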

What Are the Common Kernel Functions Used in SVMs?

You’ll find that common kernel functions in SVMs include linear, polynomial, radial basis function (RBF), and sigmoid kernels. The linear kernel works directly in the original feature space, while the others rely on the kernel trick to act as if the data had been mapped into a higher-dimensional space. This allows SVMs to handle complex, non-linear patterns without explicitly computing the new features. By choosing a kernel that matches the structure of your data, you can improve your model’s ability to classify it accurately across different types of problems.
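For reference, the four kernels can be written as plain Python functions. This is a sketch using one common parameterization; the parameter names `gamma`, `coef0`, and `degree` and their default values are assumptions borrowed from typical library conventions, not something fixed by the kernels themselves.

```python
import math

def dot(x, z):
    return sum(a * b for a, b in zip(x, z))

def linear_kernel(x, z):
    """Plain inner product in the original feature space."""
    return dot(x, z)

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    """(gamma * x.z + coef0) ** degree"""
    return (gamma * dot(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2); equals 1 when x == z and decays with distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(x, z, gamma=1.0, coef0=0.0):
    """tanh(gamma * x.z + coef0)"""
    return math.tanh(gamma * dot(x, z) + coef0)

x = (1.0, 2.0)
z = (3.0, 4.0)
for kernel in (linear_kernel, polynomial_kernel, rbf_kernel, sigmoid_kernel):
    print(kernel.__name__, kernel(x, z))
```

Each function takes two points and returns a single similarity score, which is all the SVM optimizer needs from the data.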

How Does Feature Scaling Affect SVM Performance?

Feature scaling matters a lot for SVMs, because the margin and most kernels are computed from distances or dot products between points. Without scaling, a feature measured in large units can dominate those calculations and drown out the others. Normalizing or standardizing your features puts them on a comparable scale, which usually helps the model find a better separating hyperplane and train more efficiently. Skipping this step can lead to poor classification results.
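The effect is easy to see on a tiny example. The sketch below uses three hypothetical samples with an age and an income feature and measures how much each feature contributes to the squared distance between two of them, before and after standardization. The helper names are made up for illustration. In practice a library utility such as scikit-learn’s `StandardScaler` would do the rescaling.

```python
import statistics

# Hypothetical samples: (age in years, income in dollars).
samples = [(25, 40_000), (52, 42_000), (30, 90_000)]

def standardize(rows):
    """Rescale each column to zero mean and unit (population) standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(c) for c in columns]
    stds = [statistics.pstdev(c) for c in columns]
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in rows]

def contributions(a, b):
    """Per-feature share of the squared Euclidean distance between a and b."""
    parts = [(p - q) ** 2 for p, q in zip(a, b)]
    total = sum(parts)
    return [part / total for part in parts]

# Samples 0 and 1 differ by 27 years of age but only 2,000 dollars of income.
raw_shares = contributions(samples[0], samples[1])

scaled = standardize(samples)
scaled_shares = contributions(scaled[0], scaled[1])

print("raw    (age, income):", [round(s, 4) for s in raw_shares])
print("scaled (age, income):", [round(s, 4) for s in scaled_shares])
```

On the raw values, income accounts for almost all of the distance even though the two people differ mainly in age. After standardization the age difference dominates, which matches what the data actually says.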

Can SVMs Be Used for Regression Tasks?

Yes, SVMs can be used for regression tasks, where the method is known as Support Vector Regression (SVR). Instead of separating classes, SVR fits a function and ignores errors smaller than a tolerance called epsilon. You’ll want to focus on hyperparameter tuning to improve accuracy, especially adjusting epsilon, the regularization term, and the kernel type. Keep in mind that while SVR offers good predictive power, it can be less interpretable than simpler models, so consider your need for model interpretability when choosing SVMs for regression.
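Here is a minimal SVR sketch, assuming NumPy and scikit-learn are installed. The noisy sine data and the values chosen for `C` and `epsilon` are arbitrary illustrations, not recommended settings.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic 1-D regression data: a noisy sine curve (made up for illustration).
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0.0, 6.0, size=(80, 1)), axis=0)
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=80)

# epsilon sets the width of the tube inside which errors are ignored;
# C sets how strongly points outside the tube are penalized.
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0, epsilon=0.1))
model.fit(X, y)

predictions = model.predict(X)
n_support = len(model.named_steps["svr"].support_)
print(f"support vectors used: {n_support} of {len(X)}")
```

Widening `epsilon` generally leaves fewer support vectors and a smoother fit, while narrowing it makes the model follow the training data more closely.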

What Are the Main Limitations of Support Vector Machines?

Support vector machines are powerful, but they do have their Achilles’ heel. You might face overfitting, especially with noisy data and poorly chosen regularization or kernel parameters, causing your model to perform poorly on new data. Scalability is the other big challenge: training time for kernel SVMs grows faster than linearly with the number of samples, so they can become painfully slow as your dataset grows, making them less practical for big data problems. Results are also sensitive to kernel choice and feature scaling. So, while they’re effective, these limitations can hold you back if you’re not careful.
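A rough back-of-the-envelope calculation shows where the scalability problem comes from. A kernel SVM works with pairwise kernel values, so a dense kernel matrix has one entry per pair of samples. The sketch below assumes 8-byte floating-point entries and a hypothetical helper name. Real solvers typically cache only part of this matrix, so treat the numbers as an upper-bound illustration rather than actual memory use.

```python
def kernel_matrix_gib(n_samples, bytes_per_entry=8):
    """Memory for a dense n x n kernel matrix, in GiB (8 bytes = one float64)."""
    return n_samples ** 2 * bytes_per_entry / 2 ** 30

for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} samples -> {kernel_matrix_gib(n):10.3f} GiB")
```

Multiplying the number of samples by ten multiplies the matrix size by a hundred, which is why kernel SVMs are usually reserved for small and medium data sets.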

Conclusion

Think of Support Vector Machines as a sharp tool for drawing the clearest possible boundary through noisy data. They place that boundary by maximizing the margin around the closest points, tolerate some errors through slack variables, and use kernels to handle patterns a straight line can’t capture. Once you understand those three ideas, along with the need to scale your features and tune your parameters, you can apply SVMs with confidence to even messy classification problems.